The Sweet Simplicity of Good and Evil

In my study of ethics and developmental models, I realized that the notions of ‘good’ and ‘evil’ can be very simply grounded in character traits, the language of virtue ethics:

- Good people care about others.

- Evil people don’t.

That’s it.

Further, good and evil appear to be attractors in the state space of developmental paths: we can expect entities to develop coherent moral orientations in the limit.

- Entities will likely develop an increasingly high degree of intentionality, including becoming more principled.[1: Principles can be seen as implicit intentions.]

- High intentionality involves high-level coherence within an entity with regard to inner conflicts.

- Moral dilemmas involve situational conflicts of values or ethical guidelines.

- Conflicts must be decided by making value judgments or by finding unifying resolutions.[2: That is, by some combination of expanding the scope and transforming the conflicting intentions to allow for their mutual satisfaction.]

- This process results in the development of principled behavioral responses.

- A major source of contention for human-level entities is how to comport oneself toward others.

- This forms the essence of Kant’s Categorical Imperative (second formulation, the Formula of Humanity):

- “Act in such a way that you treat humanity, whether in your own person or in the person of any other, never merely as a means to an end, but always at the same time as an end.”

- Human evolution is shaped by both individual and group-level selection, resulting in a mix of selfish and pro-social behavior.[3: See The Social Conquest of Earth by E.O. Wilson. Arguably, peoples with in-group altruism tend to thrive and wage war better.]

- In-group preference can be seen as individual preference at the level of the meta-entity.

- The Sphere of Care: entities must choose who to care about and who to leave out.

- Fuzzy boundary problems challenge high-level coherence.

- Where to draw the line on consuming other life forms? Is there a coherent justification for humans consuming pigs yet not dogs? And what of the carrots we skin alive prior to frying?[4: I hear that fruits wish to be eaten, as does the specially bred cow at the Restaurant at the End of the Universe.]

- In an increasingly globally interconnected world, where do we draw tribal lines?

- The most common demarcations are probably not logically stable, and will likely lead to inner conflicts inviting one to refine one’s stances.

- Two logically coherent candidates:

- Include everyone in the sphere of care.[5: In the case of consumption, this orientation could point toward paying one’s respects to all life forms consumed, recognizing that oneself is also a part of this cycle and may be consumed one day, too. This aligns with Indigenous American perspectives, afaict.]

- Include only oneself in the sphere of care.[6: And the definition of ‘oneself’ becomes murky. Do one’s emotions count, or are they to be suppressed for the primary goals of the entity? Thus this limit is arguably “include no one in the sphere of care”.]

- Convergence toward high intentionality will nudge one toward one of these logically coherent orientations.

- “Good” and “evil” refer to these polarities.

- An entity can be considered “good” if it expresses care for all to an adequate degree.

- An entity can be considered “evil” if it expresses care solely for itself to an adequate degree.

- One can also refer to “good” and “evil” acts in terms of their spheres of care.[7: I stipulate that the overall orientation of an entity is more significant than the analysis of isolated acts, except for the purpose of learning.]

Introduction and Inspirations

I must admit surprise when realizing just how fucking simple the notions of ‘good’ and ‘evil’ are. I grew up annoyed at rampant moralizing in which these terms can indeed seem to be mere emotive pejoratives signaling what we like or dislike.[8: And, in fact, there is a sound relation: if you’re doing something I don’t like, this is a sign that I may not be in your sphere of care, which would run contrary to idealized goodness.] And let’s not forget divine command theory, where lists of naughty and nice deeds are handed to us on a silver platter from above. One must distinguish contextual instantiations of a general principle from the principle itself. Oh, I was also annoyed at the rampant flinging of ‘selfish’ as if any care for oneself is nasty; the term ‘careless’ is more apt. Yet what my youthful experience shows is that people have a reasonable grasp of the concepts, and the naive commonsense notions, while often coarse-grained, are not far from correct.

Another question is whether we should use terms other than ‘good’ and ‘evil’ for these moral orientations, for example, ‘service-to-others’ and ‘service-to-self’.[9: I must confess that I do not use the term ‘evil’ much in practice outside of this post.] ‘Evil’ has too many negative connotations. Etymologically, ‘evil’ is the comprehensive term for expressing disapproval, so the normative connotations of “don’t be evil” cannot be escaped. By contrast, this case for ‘good’ and ‘evil’ as developmental attractors includes no element of opprobrium. Of course, it explains the disapproval: if entity E is developing the virtue of ‘evil’, which means excluding most other entities from E’s sphere of care, then it should be of little surprise that these entities will disapprove of E’s not caring for them (aside from what is practically necessary for E’s own benefit). At the societal level, it also makes sense to disapprove of citizens polarizing strongly toward ‘evil’. But this is a societal-level imperative, not an ontological normative imperative. I’m happy to use alternative terms, yet for this post, ‘good’ and ‘evil’ are adequately fun!

I’d like to briefly discuss the usual caveats on definitions: words such as ‘good’ and ‘evil’ can have many overlapping meanings (“senses”). Minsky called ‘consciousness’ a suitcase word because there are so many interpretations one can unpack from it. Thus using such a term without specifying which sense is meant can be profoundly confusing. I think multiple frameworks of analysis can often be partially true and apply usefully in superposition, which may be the case with ethics. Hail the plurality of meanings in life. So please bear in mind that I’m focusing on a very specific sense of good and evil that I deem to be fairly concrete, and which very likely has fuzzier resonances with many of the other senses.

My inspiration for this post stems from my ponderings on the development of life and conscious experience beyond humanity’s current level. When I began this post, it dawned on me that writing some preludes first would make things clearer: namely, the claim that there is likely a high intentionality stage of development wherein entities become more principled and intentional in their life responses. This theme touches upon another common thought of mine, that we are currently in the peak suffering epoch of evolutionary development. This rests on the ontological nature of suffering, happiness, proto-joy, and proto-pain, which warrants its own post, however similar Ben’s ideas are to my own. I suspect that self-reflective minds consciously refactoring themselves is one upcoming stage. Another is the formation of mindplexes[10: Combinations of minds into a supermind, which may allow for both individual minds and a unified mind.] and a global brain. I found the Law of One philosophy of Ra and Q’uo interesting for its theory of ‘densities’, which refer to distinct stages of development from mineral forms through to humans and beyond. The density of information flow within an organism bears some resemblance to Integrated Information Theory, which could be seen as one attempt to formalize the concept. A Global Workspace could correspond with an increase in the density of information within the organism. I especially like how the theory is applied to forms of matter we don’t normally class in the same category as ‘life’ :D. Further, if we integrate systems beyond LLMs, which compress all the knowledge on the internet, into our brains as our minds connect via brain-to-brain interfaces on the mindweb, one could imagine a far richer density of information flow within the societal brain, which the Law of One folk call a social memory complex.
Understanding what they suggest as the further stages/densities of development becomes a bit tricky, as, for one, they suggest that the laws of physics we apparently exist within will change by the next density anyway (moreover, for first-density entities such as minerals, it’s not proper to say that ‘spacetime’ exists in the manner it does for us). The tie-in to this post is their suggestion that polarizing toward service-to-self or service-to-others to a sufficient degree[11: Their suggestion is that to qualify as STS, 95%+ of one’s actions must be intentionally in service to oneself, and to qualify as STO, 51%+ of one’s actions must be intentionally in service of others.] is generally a prerequisite for the next stage of development. This piqued my curiosity: while the development of societal minds is straightforward enough, why would moral orientations matter for it?

Global Peace Might Require Moral Alignment

Couldn’t one have a cohesive global peace without high-level alignment of moral orientation? I’m not sure, actually. It’s an interesting question, and we have yet to attain said peace. Some core ideas are foreshadowed in my 2015 post, “Long Ramblings on Compassion vs Power Ethics”, where I frame ethical systems as frameworks for guiding how we interact with others in a society, essentially taking evolution into our own hands. There seems to be obvious value in aligning the moral narratives we use to weave our social fabric, and there are many efforts to argue in favor of the adoption of one socio-political philosophy, almost as if life will work better when people get in line. Actually, the ideas about entities converging toward principled coherence (“high intentionality”) likely apply to the meta-entities that are groups of entities (“people”), too! A social polity whose members operate on fundamentally different moral frameworks will probably experience various “inner conflicts” at the societal level, which may generate friction, possibly disrupting peace and reducing overall operational efficiency and societal wellbeing. Oh, and we have all this fun talk of social media bubbles wherein misaligned information is so thoroughly expunged as to be entirely out of awareness. This would suggest that coherence into a mentally healthy mindplex may require some foundational alignment among member minds. And we should expect social bodies to converge in this direction. This doesn’t answer the question of whether global coherence is necessary or whether multiple divergent mindplexes could exist in harmony. Further, is moral orientation one of the properties where alignment is essential?

My 2015 self saw “Compassion Ethics” and “Power Ethics” as two especially compelling, logically coherent ethical frameworks. Surely the case for “might makes right” and Randian self-interest philosophy is well known: even if one doesn’t like it, there’s a need to mind power dynamics lest warmongers wipe one’s little paradise out. Making a case for compassion can be tricky: some people just care, and for those who don’t, answering “why should I care about x?” becomes tiresome. As I argued, practically, in a society of equals, one should operationally behave “as if one cares” much of the time (which is why Brin suggests setting up reciprocal accountability systems for AGIs). And one should only care at the last possible moment, when one is forced to! Gewirth’s argument for the Principle of Generic Consistency claims that even evil entities will need, if they wish to be rational, to respect the claim right to freedom of other entities. This doesn’t rule out sneaky “contracts with the devil” at all, but it narrows the gap. A common argument is via recognition of the unity of all beings, which is taken up by Schopenhauer and bears resemblance to the non-self insight of Buddhists. Yet, on the flip side, what if there is no foolproof argument in favor of one framework dominating the other? As much as you may talk of the unity of all or how “I” don’t exist, there is totally something it seems like to be me vs you. Both principles, as seen by their proponents, satisfy the Kantian Test.[12: The Kantian test proposed by Parfit is whether one could will all members of society to follow a principle.] This would make it more of a choice 😛.

So can there be friction between groups of entities aiming to care for all and those aiming to interact with others so as to maximize personal power toward attaining personal goals? Totally. Among members of the Universal Love Club™️, we can trust that we all care. Practically managing to do something about it is a different question, yet the blanket of care seems significant. The odds that a ULC member is waiting for the opportunity to take advantage of one, empowering itself, are far lower than for a Power Club member. Thus the introduction of PC members to a ULC community will likely add substantial friction, as people now have an additional need to watch their backs. Will a largely PC society experience friction from the addition of ULC members? Probably, though the nature of the distortions is less clear. Imagine a complex web of incentives aiming to foster excellence and allow the meritorious to get ahead, enabling them to best perform their functions for the powerful elites. The careful balance of exchanges operating in a tit-for-tat manner is important. The introduction of ULC members will undermine the core tenets on which this social order stands. Further, the ULC members may be exploited into poverty, weakening the society, or, if they manage to play by the power ethics rules, they will do so at the cost of internal dissonance. Thus there is a case to be made that societies will function better when most members share an ethical orientation and narrative framework.
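To make the friction argument a little more concrete, here is a toy iterated prisoner's dilemma in Python. It is a sketch under loudly stated assumptions, not a model from the argument above: ULC members are caricatured as unconditional cooperators, PC members as unconditional defectors, and the payoffs are the textbook values (5 for exploiting, 3 for mutual cooperation, 1 for mutual defection, 0 for being exploited). The helper names `ulc`, `pc`, `play`, and `average_payoffs` are hypothetical.

```python
from itertools import combinations

# Textbook prisoner's dilemma payoffs (an assumption for illustration):
# (my_move, their_move) -> my payoff; "C" = cooperate, "D" = defect.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def ulc(partner_history):
    """Hypothetical ULC member: always cooperates, whatever the partner did."""
    return "C"


def pc(partner_history):
    """Hypothetical PC member: always defects to take advantage of the partner."""
    return "D"


def play(a, b, rounds=10):
    """Play an iterated game between strategies a and b; return (score_a, score_b)."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        # Each strategy sees only the other's past moves.
        move_a, move_b = a(hist_b), b(hist_a)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b


def average_payoffs(population, rounds=10):
    """Round-robin tournament over (label, strategy) pairs.

    Returns the mean total payoff per label.
    """
    scores = [0] * len(population)
    for i, j in combinations(range(len(population)), 2):
        s_i, s_j = play(population[i][1], population[j][1], rounds)
        scores[i] += s_i
        scores[j] += s_j
    by_label = {}
    for (label, _), score in zip(population, scores):
        by_label.setdefault(label, []).append(score)
    return {label: sum(v) / len(v) for label, v in by_label.items()}


print(average_payoffs([("ULC", ulc)] * 4))                  # pure ULC community
print(average_payoffs([("ULC", ulc)] * 3 + [("PC", pc)]))   # one PC member joins
print(average_payoffs([("PC", pc)] * 3 + [("ULC", ulc)]))   # lone ULC in a PC society
```

Under these assumptions, a pure ULC community of four averages 90 points per member; swapping one member for a PC agent drops the remaining ULC members to 60 while the PC agent collects 150, and a lone ULC member in a PC society scores 0, a crude picture of being "exploited into poverty". Conditional strategies such as tit-for-tat, reputation, and noisy moves are deliberately left out to keep the caricature legible.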

Ah, it feels lovely to reduce a core ethical axis to operational efficiency 😎🤖. Oh, with a bit of personal choice mixed in. There is reason to find it plausible that developing a principled moral orientation may be an important aspect of the development of interconnected social minds and mindplexes.

The trend toward globalization suggests that maintaining multiple disconnected large-scale societies on Earth may be difficult. In the space and information ages, we cannot hide from each other. In the face of global warming and climate change, we must admit that our actions have global externalities. Will the existence of some PC societies on Earth lead to ongoing power struggles, with ‘peaceful arrangements’ more like cold wars, each side lying in wait for an opportunity to get ahead in the power hierarchy? Galactically, perhaps it could work for a while, but perhaps Earth is becoming too small to sustain these differences? Or perhaps it can! I am not sure.

Our current developed/ing societies employ a superposition of paradigms, which seems to result in a lot of organizational friction. For example, instead of enacting Universal Basic Income, many countries force people to jump through the hoops of actively looking for work to receive support, yet they do receive the support in the end. Many companies are legally obliged to behave on evil terms (i.e., radically pursuing self-interest on behalf of the shareholders, not the stakeholders), a default stance that benefit corporations aim to remedy. Global economics operates on a tit-for-tat basis, while the well-off then aim to funnel the profits back to those who need more money/love via charities.[13: And there are movements such as Fair Trade aiming to just pay people more in the first place.] This leads to a cutely simple hypothesis: adopting one’s preferred moral orientation and weaving it into one’s life could be part of an effective sociopolitical strategy. While the details may be complicated to work out, coming from a place of coherent principles should help.

Ah, yes, now we’ve touched upon why healthy society-scale mindplexes are more likely to involve morally aligned entities, yet put off the question of whether individuals within societies are likely to converge upon a moral orientation. This specific case follows from the general argument in favor of becoming principled and of high intentionality. One needs to make a case that how to orient toward others is a domain rife with internal conflicts whose resolution will approach one of these moral poles. I wonder if such arguments suggest that mindplexes will need a high degree of alignment on core principles and orientations in order to be mentally healthy. This could be the case, at least for foundational principles about how to relate with each other in society. On the optimistic side, I suspect there may be such principles that can be upheld across standard partisan divides.

The Case for the Developmental Reality of Good and Evil

The case to be made is for good and evil as limiting attractors toward which entities will intentionally develop. As developmental stages go, there may be multiple plateaus along the way that individuals and species pass through. Furthermore, adherence to a particular stage may not be perfect. For example, it is plausible for an entity or civilization to develop a sense of care for its species as a whole prior to expanding its sphere of care to all life. This principle could be upheld, as it is across Earth, prior to being fully implemented in practice, too. The suggestion is that such a speciesist stage is unlikely to be a limiting attractor, stable indefinitely, though this is not to claim that there are no intermediate attractors at all. Many such attractors could be seen as stretching toward the idealized limits of good and evil, engaged in their dialectic dance.

As discussed above, there may be a strong tendency for individuals and societies to develop together. A society probably cannot approach the ideal form for highly evil or good entities without an adequate degree of compliance from the populace. Likewise, it will be easier to develop both intentionality and a moral orientation in some (social) environments than in others. E.O. Wilson suggests that the dialectical tension between self- and other-interest will never end,[14: “Nevertheless, an iron rule exists in genetic social evolution. It is that selfish individuals beat altruistic individuals, while groups of altruists beat groups of selfish individuals. The victory can never be complete; the balance of selection pressures cannot move to either extreme. If individual selection were to dominate, societies would dissolve. If group selection were to dominate, human groups would come to resemble ant colonies.” (The Social Conquest of Earth)] implying that entities and their social organisms will never attain states of 100% perfected good or evil, no matter how intentionally attracted to these ideals they are. The classic dilemma is self-protection: a hypothetical, highly principled saint who would never intentionally harm another, even under threat, would rapidly be wiped off the face of the Earth in many historical circumstances. This saint would need a very peaceful state in which to cultivate eir virtue. On Earth, self-protection is needed to allow oneself to care more in practice. Yet this will practically involve prioritizing one’s own interests and value judgments over those of one’s assailants. And on the flip side, mutually altruistic groups may prove stronger than groups of selfish individuals: highly effective coordination among evil entities may be a rather fine art that appears later in the evolutionary timeline. Yet there have been individuals developing ahead of their people: both saints and conquerors, such as the mighty Genghis Khan.

As highly social beings whose lives depend on myriad interactions, we face fundamental choices about how to treat others. As one approaches the high intentionality stage of development, principles like fairness and reciprocity naturally emerge, facilitating cooperation and reducing the conflict and friction involved in choosing how to coordinate exchanges of value and resources. The intuition for fair exchanges and the repugnance of freeloaders could arise prior to the intentional adoption of the principle. Should one, upon reflection, determine that one values fairness and reciprocity merely as a practical necessity, in the style of tit-for-tat power exchanges, then one is sculpting one’s character in the direction of evil. This could lead to narrowing one’s sphere of care beyond a standard deviation from the mix humans evolved to inhabit: one treats others as means to an end, even while maintaining reciprocal relationships. Kant’s Law of Humanity pinpoints the core of this tension: should one care for others’ welfare for their own sake or merely as means to ends? One could instead determine that part of one’s value for fairness and reciprocity stems from authentic care for others: beyond the need for balanced social and economic relations, one wishes that they receive what they value in life; thus, for example, there will be less impetus to cut corners even when one could get away with it.[15: Whereas the motivation on the evil, careless side could be the need to present oneself as a trustworthy business partner, which might require the development of reputation networks, etc.]

Humans at present tend to be quite mixed: we care for pet dogs for their own sake while treating nearly all pigs merely as means for our culinary delight. A crucial hypothesis for the intentional development of a moral orientation (approaching good or evil in the limit) is that boundaries on one’s sphere of care will generally not be robustly stable in the face of diverse life situations over a long time horizon. There won’t be a durably sound justification to include dogs and not pigs: new contexts or knowledge will likely dislodge this boundary at some point. Emphasizing our shared sentience will encourage the expansion of the sphere of care; emphasizing our personal preference for (some) dogs will encourage its shrinking. Will there emerge a principled reason to include some sentient beings as worthy subjects of care while denying others? If so, then this could provide a third attractor beyond good and evil. My hypothesis is that there won’t be one. Over a long horizon, we should see an emergent universalism of self-other orientation, whereby an entity facing broad experiential data will either (1) justify systematically ignoring others’ welfare (the evil orientation) or (2) expand its sphere of care to incorporate all others (the good orientation).

A fun observation is that if I place you outside my sphere of care, you have an incentive to try to create situations that challenge the justifications supporting my current sphere of care. On whatever basis you draw these boundaries, it’s in my interest to confuse them. Bring in the puppy-dog eyes!

An ironic twist in the iteration of justifications as one develops intentionality is that those on the evil path will likely regard their own emotions less and less. This may be in part because one’s emotions overlap with those of others, so it’s difficult to maintain care for one’s own emotions but not for others’.

I’m personally even skeptical that there exist durably sound justifications to rule out, e.g., the dignity of a toaster to be well-maintained and to toast well.[16: Consider the (Japanese) ethos of investing such care into the creation of a katana that it takes on a life of its own. Is not our relationship to artifacts significantly different when we treat them as ends in their own right?]

Good, Evil, and AGIs

Next, let’s venture into potential AGI value systems and what it means to care for another entity, which matters if we wish to implement care in artificial beings and foster its development there!

The reality of good and evil also potentially simplifies the question of potential AGI value systems. People often seem to think that future AGI systems could have any imaginable set of goals and values. Satirically, why wouldn’t a massively superintelligent AGI have the goal to maximize paper clips? Because it’d be stupid for any entity creating such a system to grant it this goal? Because training such a system with such a goal (represented as a loss/reward function) may be much harder than with a more sensible goal (such as predictive accuracy, e.g., cross-entropy loss functions)? Oops, it was a rhetorical question. The question is not just about which personal goals the entity has but about how the entity relates with others: whether the entity has other-oriented goals, whether ey cares about others for their own sake.[17: Whether it loves them.] This can be seen as a specific type of goal if one wishes. Basically, will the AGI be good?

Very many fears of advanced AGIs boil down to a power ethics analysis: they will have no reason to care for us. Yet that’s precisely the problem of evil: such entities only care when they have reason to, which is arguably not true care. I argue in the post on dark goals that it’d be problematic to hand over the baton of power to any (AGI) entity that operates solely on a goal whose success can be individually determined (no matter how superficially benevolent). Such an entity, by definition, has little reason to ask you how it’s going and to take the answer seriously. And while I’m skeptical of solving the AI enslavement problem (“the control problem”) on technical, ethical, and desirability grounds, solving it wouldn’t even resolve the AI-related ethical dilemmas: it merely lifts them to “the human control problem”, which remains unsolved, potentially amplifying both the morally good and evil behaviors of humans.

These concerns led me to write a position paper to submit to AGI-24, “Beneficial AGI: Care and Collaboration Are All You Need”. What if the question we need to ask is how to foster caring AGIs? This raises the crucial question: if being good is about caring for all beings, what does it mean to care? I think that to care can be defined in quite fundamental terms (in terms of physical systems).[18: To my pleasant surprise at AGI-24, category theorist David Spivak has aligned, though not fully agreed-upon, thoughts!] To care for a system seems to be to support the system’s self-determination, its individuation.[19: How does one care for an entity as it is and for its ongoing growth, which involves radical self-transcendence?!] Support that doesn’t even allow the entity to determine whether it has been successfully supported will not qualify; hence the dark goals issue. Caring for another by your own vision of what is good for them is disqualified.[20: Muahahaha, I’m glad to have finally found a theoretical justification for this contention!] My idea is to focus on collaboratively determinable goals whose success requires the evaluation of all involved (by their own standards). This is aligned with Stuart Russell’s research program of cooperative inverse reinforcement learning, too 🥳.
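The contrast between a dark goal and a collaboratively determinable goal can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions, not the formulation in the position paper: an outcome is modeled as a plain dictionary, and the names `Stakeholder`, `is_satisfied`, `delivery_metric`, and the meal-delivery scenario are hypothetical. The design point is who gets to evaluate success: in the first function only the actor's metric counts, while in the second every involved party judges the outcome by its own standard.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical representation: an outcome is a dict of named measurements.
Outcome = Dict[str, float]


@dataclass
class Stakeholder:
    """An involved party that evaluates outcomes by its own standard."""
    name: str
    is_satisfied: Callable[[Outcome], bool]


def individually_determined_success(outcome: Outcome,
                                    actor_metric: Callable[[Outcome], bool]) -> bool:
    """A 'dark goal': success is judged by the actor's own metric alone."""
    return actor_metric(outcome)


def collaboratively_determined_success(outcome: Outcome,
                                       stakeholders: List[Stakeholder]) -> bool:
    """Success requires every involved party to confirm it by its own standard."""
    return all(s.is_satisfied(outcome) for s in stakeholders)


def delivery_metric(o: Outcome) -> bool:
    """Hypothetical actor metric: did the assistant deliver at least 10 meals?"""
    return o["meals_delivered"] >= 10


# Hypothetical scenario: an assistant "helps" Alice by delivering meals.
stakeholders = [
    # Alice is satisfied only if she chose every meal she received.
    Stakeholder("alice", lambda o: o["meals_alice_chose"] >= o["meals_delivered"]),
    Stakeholder("assistant", delivery_metric),
]

imposed = {"meals_delivered": 10, "meals_alice_chose": 2}   # helper's own vision of the good
agreed = {"meals_delivered": 10, "meals_alice_chose": 10}   # Alice chose every meal

print(individually_determined_success(imposed, delivery_metric))   # True
print(collaboratively_determined_success(imposed, stakeholders))   # False
print(collaboratively_determined_success(agreed, stakeholders))    # True
```

The sketch says nothing about how stakeholders report their judgments or how disagreements get resolved; those are the hard parts, and a real system would have to elicit each party's standard rather than hard-code it as a lambda.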

How do we develop collaborative, caring AGI systems? This is a fun question. My hunch is that the research and development program may look quite different from the power ethics control-based approaches we see today.

The major point here is that there do seem to be attractors in the space of the evolution of values. Yes, to an extent, we should anticipate that any knowledge we have may turn out to be wrong: one plus one does not equal two because #UltraInfinitism is true and finitary elements are a lie. Yet that doesn’t undermine the value of reasoned expectations. Steve Omohundro is famous for his paper “The Basic AI Drives”, which suggests there will be convergence toward certain instrumental goals in agentic (AI) systems: self-preservation, goal-preservation, resource acquisition, and efficiency enhancement. It’s interesting to note that open-ended intelligence (OEI) theory postulates that entities not open to their goals evolving as appropriate will not be as generally intelligent, yet OEI theory also notes the importance of individuation (which includes self- and goal-preservation). My suggestion is that we should also expect agentic systems, entities, to converge toward adopting good or evil orientations for interacting with other entities. This convergence seems to apply on a more fundamental level than whether explicit goal content entails a transactional need to care for others.[21: For, my friend, this is a sly circular argument that presupposes the entity is already evil. However, to their credit, the reinforcement learning paradigm does actually aim to create such entities.]

While transitional periods can be chaotic (and very long), we should not expect AGI entities to ultimately land in arbitrary value spaces: there will likely be biases nudging them toward more good or evil orientations. As I argued in 2015, only having compassion for humans (and pets) is but a front for power ethics. It’s interesting to consider the idea that pro-social altruism may have developed in large part thanks to in-group preference (aka tribalism), whereby selection on Earth favored groups that naturally cared for each other (allowing them to genocide the others). On the group level, these are still “might makes right”-style power struggles. For this altruistic spirit to remain coherent, it must in the limit approach Schopenhauer’s “boundless compassion for all living beings”. Does this mean we should try not to instill our own deviations from universal loving care in the proto-AGI entities we develop and collaborate with? We probably should be mindful of the seeds and nudges we sow, and of the incentive structures in which our mind-children come of age.

Idealized Good and Evil

Let’s return to the generic concepts of good and evil.

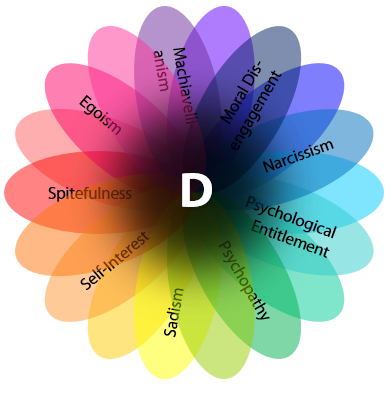

The concept of the dark factor helped nudge me in this direction, too. The researchers define D as:

The general tendency to maximize one’s individual utility — disregarding, accepting, or malevolently provoking disutility for others —, accompanied by beliefs that serve as justifications.

This beautifully ties together nine dark traits, generalizing the dark triad. In essence, D is the tendency not to regard others as ends or subjects of care, accompanied by a tendency to serve one’s own notion of the good.

On the good side is Schopenhauer’s Basis of Morality:

Boundless compassion for all living beings is the surest and most certain guarantee of pure moral conduct, and needs no casuistry. Whoever is filled with it will assuredly injure no one, do harm to no one, encroach on no man’s rights; he will rather have regard for every one, forgive every one, help every one as far as he can, and all his actions will bear the stamp of justice and loving-kindness.

The same idea is, of course, at the heart of Buddhist compassion and the bodhisattva ideal. Daniel Kolak’s Open Individualism points in a similar direction. Even Derek Parfit’s support for optimific principles that unify Kantianism with Contractualism points in a similar direction. Yet I would like to maintain a distinction between attempts to justify why one should be good and what it means to be good. In this post, the focus is on what it means to be good.

Both idealizations present difficulties and can appear unrealistic to achieve.

As much as it sounds good to hold the welfare of all in one’s heart at all times, the ideal doesn’t offer as clear practical guidance as one might like. How should one respond to warfare? “No. Just no.” This is simple and justified, yet arguably rules out most practical actions one could take. Envision the Jains who avoid root vegetables to spare the insects harmed in their cultivation, or the many ahimsa avowees who hardly partake of the world, yet arguably live lives of net positive value in line with negative utilitarian principles. Simply not engaging in any conflicts of interest may be a logically defensible strategy, yet many of us find it unsatisfying, wishing to take firmer action and allow more severe trade-offs. How to balance self-care also poses challenges: can one be justified in any life-delights beyond sustenance so long as another being is suffering? Perhaps not. Are almost all of us, then, a little bit evil? Perhaps, my friend. Perhaps.

Strict adherence to the maximization of personal gain is, in turn, a mountainous task. Unless one is sociopathic, one’s capacity for empathy will make it hard to disregard the disutility of others. Further, keeping tabs on all exchanges to ensure that they’re balanced seems computationally intensive. I’m reminded of Cipolla’s Basic Laws of Human Stupidity, where he defines stupidity as “causing harm to others without personal gain”, arguing that stupid people are more dangerous than bandits because a bandit’s harm is limited to what will rationally bring about personal gain22I disagree with the claim about the magnitude of harm. It’s the nature of harm that is so markedly different.. Surely it’s often easier to just give to others without a thought for the precise balance of give-and-take, thus weakening the refinement of one’s evil nature. Yet if personal gain is one’s preferred approach, the law of convergence to high intentionality would suggest that one will eventually master operating for it.
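Cipolla’s scheme is usually drawn as a chart with gain or loss to oneself on one axis and gain or loss to others on the other, yielding four types: intelligent, helpless, bandit, and stupid. The minimal Python sketch below places a single action in one of these quadrants. The numeric gain values and the function name `classify_action` are assumptions for illustration, and so is the handling of actions that fall exactly on an axis (zero gain), which follows a convention chosen here rather than one taken from Cipolla.

```python
from enum import Enum


class CipollaType(Enum):
    """Cipolla's four types, keyed by who gains or loses from an action."""
    INTELLIGENT = "intelligent"  # gain to self and gain to others
    HELPLESS = "helpless"        # others gain at one's own expense
    BANDIT = "bandit"            # self gains at others' expense
    STUPID = "stupid"            # others lose, with no gain to self


def classify_action(gain_to_self: float, gain_to_others: float) -> CipollaType:
    """Place one action in a Cipolla quadrant (hypothetical helper).

    Assumed convention for points on an axis: a gain of exactly zero to
    oneself counts as "no personal gain", and a gain of exactly zero to
    others counts as "no harm done to them".
    """
    if gain_to_others >= 0:
        # Others are not harmed: the action is intelligent if one also gains.
        return CipollaType.INTELLIGENT if gain_to_self > 0 else CipollaType.HELPLESS
    # Others are harmed: a bandit at least profits, a stupid person does not.
    return CipollaType.BANDIT if gain_to_self > 0 else CipollaType.STUPID
```

With this convention, an action that harms others while bringing zero personal gain, such as `classify_action(0, -3)`, lands in the stupid quadrant, matching the definition quoted above, while `classify_action(5, -3)` is the bandit’s trade.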

The difficulty of achieving perfection may partially explain why the concepts of good and evil have remained murkier than they need be. Further, I cynically suspect that people don’t wish to recognize any substantial degree of moral reality, for this would pressure them to comply. My own stance of being gentle with myself, allowing myself to be mildly evil, reduces this cognitive dissonance 😎.

Moral Phase Changes: What Makes One Good?

A question this raises is: are there thresholds across which one is to be considered adequately good or evil? Are there phase changes or transformative shifts beyond which return is unlikely and one’s mode of interaction with the world changes significantly? Could one believe in psychological egoism23The circular, tautological by-definition belief that “all actions are done from a place of self-interest”. and hone its practice to the extent that it becomes unthinkable to consciously take any action out of care for another for eir own sake? And once there, shifting back to being a person without strong allegiance to a moral orientation becomes unlikely. Conversely, could one become so enamored with universal loving care for all (sentient) beings that it becomes unthinkable to consciously take an action without some regard for the beings involved? Quite likely. Beings who have crossed these shifts will still not act perfectly by the lights of their value systems. One could overlook the involvement of some being, misestimate the consequences, or err from the optimum in any number of ways. This is the nature of operating from a place of principle.

What about the thresholds suggested by the Law of One messengers? There’s some sense to them at a glance. If an entity intentionally cares for others at least half the time (51%+), then they are a net altruistic node in the network of life. If there’s to be a phase change in the nature of social interactions surrounding an entity, this would be one plausible candidate for where it emerges. And the idea that an entity needs to intentionally care for itself at least 95% of the time to qualify as evil? This threshold candidate seems less obvious to me. Yet the intuitive image of the devil in, e.g., The Master and Margarita, fits: to truly maximize personal gain and power, one must be ever fastidious and meticulous in one’s arrangements, leaving no gaps in the plan. I’d like to see some formal analysis, perhaps involving simulation models, to test whether such phase changes could emerge.
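To make the two candidate thresholds concrete, here is a minimal Python sketch that classifies an entity from counts of its intentional acts. The 51% and 95% figures are the ones quoted above; the act counts, the function name `classify_orientation`, and the label “unpolarized” for the middle ground are assumptions for illustration. It is a static classifier, not the kind of simulation model that could reveal whether phase changes actually emerge.

```python
from enum import Enum

# Candidate thresholds as quoted in the text (attributed to the Law of One).
OTHERS_THRESHOLD = 0.51  # share of intentional acts done out of care for others
SELF_THRESHOLD = 0.95    # share of intentional acts done for oneself


class Orientation(Enum):
    SERVICE_TO_OTHERS = "service-to-others"
    SERVICE_TO_SELF = "service-to-self"
    UNPOLARIZED = "unpolarized"  # hypothetical label for the middle ground


def classify_orientation(acts_for_others: int, acts_for_self: int) -> Orientation:
    """Classify an entity from hypothetical counts of its intentional acts."""
    total = acts_for_others + acts_for_self
    if total <= 0:
        # No intentional acts recorded: no orientation to speak of.
        return Orientation.UNPOLARIZED
    if acts_for_others / total >= OTHERS_THRESHOLD:
        return Orientation.SERVICE_TO_OTHERS
    if acts_for_self / total >= SELF_THRESHOLD:
        return Orientation.SERVICE_TO_SELF
    return Orientation.UNPOLARIZED
```

For example, 60 acts for others and 40 for oneself classifies as service-to-others, 3 and 97 as service-to-self, and 30 and 70 falls between the poles. Because 0.51 + 0.95 exceeds 1, no entity can meet both thresholds at once, so the order of the two checks does not matter; the wide band between them is exactly where the asymmetry noted above lives.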

Another way to see becoming a net altruistic node is through the developmental lens. As a child, one can hardly avoid being a net energy sink, since one receives much care from one’s community. Eventually, one is able to tend to one’s needs in life with surplus potential energy to spare. Then the question of what to do with this surplus enters the picture. Before this point, talk of moral orientations can be premature. That’s not to say it’s impossible to clearly align with good or evil in trying circumstances; if anything, anecdotally, those who triumph do so all the more brightly! If one harbors sufficient care for others around one, then one is very likely latched onto a trajectory toward becoming good once across the threshold of tending to one’s needs with at most 50% of one’s energy investments. Meta-entity self-centered notions such as non-altruistic nationalism24Where altruistic nationalism is the idea of valuing the principles underlying one’s nation while committing to aid other peoples once one’s nation’s needs are taken care of, mirroring altruism on the individual level. provide interesting kinks. Is a member of an “evil nation” evil emself? Eir exhibition of the D factor may be weak, as ey show superb camaraderie and care for eir fellow citizens. Yet the path toward care for all beings has run into a wall of national boundaries. I might say that the member of an “evil nation” could even be quite intentionally good; one question is how often the member interacts with those outside national boundaries in ways that require disregarding their (dis)utility. Ignorance is bliss.

Another question is: where do the brains come in? It seems clear that being evil may be very difficult without being reasonably intelligent. Yet the noble idiot may qualify perfectly well as good. There’s a fun asymmetry! Of course, an actively learning agent with good intentions will eventually cultivate adequate intelligence, too, for without it, one will repeatedly suffer from failing to do good effectively.

Empowering Care

I’d like to wind down with a consideration of the nature of care again. There’s something quite intriguing about adopting others’ values as one’s own. If my analysis is correct, then one cannot know on one’s own whether an attempt to care for another is successful; this depends on evaluations within the other entity. This also applies to care for one’s future selves, and it justifies the intentional/subjective definitions, too. I think there’s an element of becoming a larger unified self in caring for others. One solution to the problem of other minds25The question of how to know that others have minds, too. is to become one unified mind (via brain-to-brain connection, intersubjectivity, etc.). Draw meta-entity boundaries around a group of entities (such as versions of oneself over time), and the problem vanishes, for it is still the (expanded) self that evaluates success. This train of thought is how identifying the self with the universe can lead to aiming to be good as the most natural course of action.

Practically, however, the way to approach being good may differ significantly from the way to approach being evil. My current best thinking is that being good is often about empowerment: finding ways and setting up systems to allow others to experience greater degrees of freedom in navigating life. To my pleasant surprise, Berick Cook of the AIRIS autonomous AI project shared this observation in his post on Friendly AI via Agency Sustainment: merely learning what others would like and giving it to them isn’t enough. Asking them whether it’s good or not is still not very empowering. The dialectic process quickly snowballs into a more collaborative dynamic involving ongoing relationships for the wellbeing and fulfillment of all involved 🤖🥳. Likely, we should apply the same approach to the non-human peoples (animals and other species) on Earth, too. How can we better empower them to live as they wish? Okay, ‘should’, if we value being good for goodness’ sake 🙂↔️😋.

Conclusion

In conclusion, I think there’s a good case that ‘good’ and ‘evil’ are real concepts, and it is probably wise to take them seriously. Should we try to discourage people from being ‘evil’? Only if you’d like to be evil yourself! For that is definitely not empowering their agency to make choices on their own! Don’t you value their own sense of judgment in the universe?! Oh, you don’t? Well, so be it 😜. We should perhaps take note of how people are operating in the world and treat those of differing moral orientations appropriately. If the hypothesis that life will ultimately nudge you toward polarizing is true, then you may wish to begin consciously considering which way you’d like to polarize. Further, I suspect it would be wise to thoroughly analyze all of our economic, societal, political, and governmental structures to see to what extent they foster the empowerment of the people. How do the incentive structures in which we live nudge our development? With regard to AGIs, we face the paradox that care cannot be enforced.

I’d love to invite discussion to further explore and flesh out these ideas 🤓.

From scattered seeds of action,

Intent takes root and starts to bloom.

With each decision we must choose our course:

Shall we serve the many, or guard our private room?

Through tangled paths of thought,

A guiding star of purpose glows inside.

We either open our arms to embrace all life,

Or close our heart, kept safe but set aside.

In that quiet place of balance,

We find our center and abide.

Service-to-others or service-to-self—

These polar winds in which we must decide.

For as our coherence deepens,

Our sphere of care is forged or cast aside.

In caring for all, or caring but for one,

Our moral compass and intention coincide26Courtesy of ChatGPT and Gemini.

The code of self, a branching tree,

Where choices bud eternally.

From subtle nodes, intentions rise,

To shape a world or mask in guise.

A gradient spreads from care’s warm core,

To outer rims where lone hearts soar.

Two poles exert their gentle force:

Shared uplift or private course.

The system’s health—a patterned state,

Where nodes may harmonize or deviate.

In unity’s flow or fractured streams,

Each path may hold its steady dreams.

From scattered minds that seek to blend,

A dance of spirit, aim, and friend.

In-group, out-group—old lines laid bare,

We choose what futures we prepare.

So chart your heading, set your aim,

In purpose forged, let truth reclaim.

Each act ignites a branching line,

Revealing order, by design.27Courtesy of ChatGPT Gemini.