Alan Gewirth’s Proof for the Principle of Generic Consistency

Every prospective, purposive agent (PPA) has a claim right to its freedom and well-being (FWB)Archive of Formal Proofs

David Fuenmayor and Christoph Benzmüller1🌐 in an epic historical first have formalized a normative ethical theorem in Isabelle/HOL: Alan Gewirth’s Proof for the Principle of Generic Consistency. The Argument is roughly as follows2Paraphrased directly from their paper.:

- I act for some purpose E, that is, I am a purposeful agent.

- E is subjectively good.

- My freedom is a necessary condition for my agency, to attain any purpose.

- Freedom is a necessary good.

- I have a claim right to my freedom.

- Every purposeful agent has a claim right to its freedom.

Fuenmayor and Benzmüller found versions of the axioms regarding subjective goods, purpose, freedom and wellbeing, claim rights, etc., in such a manner that the proof works in Isabelle/HOL. An additional cool aspect is that they try to take the state-of-the-art analytic philosophy approaches to modeling perspectival judgments and formalize them. This is one of the weaknesses of working in SUMO that I discussed in the second worklog: how to model situations. To this end, they use a dyadic deontic logic with conditional obligations and a modal system for “dialectic necessity” based on “Kaplan’s logical framework LD”. It seems generally acknowledged that to do “real-world reasoning“3Commonly called “commonsense reasoning”., one will need to work with multiple modal systems interleaved together, which becomes quite subtle. Benzmuller is a big proponent of showing that Shallow Semantic Embeddings can work in HOL as a universal meta-logical reasoning system, i.e., defining the domain-specific logics in HOL. Ben Goertzel et al. have also long been proponents of this approach4See Meta-MeTTa: an operational semantics for MeTTa for their recent programming language to this end.

What’s the significance of this?

If you disagree with Gewirth’s theorem, then there should a particular property of the definitions or logical set-up that you disagree with.

Perhaps the perspectival modeling via Kaplan’s logical framework doesn’t capture the semantics properly? One meaning postulate I consider suspiciously strong is “explicationGoodness3”, of which they write, “The third axiom, however, has been added by us as an implicit premise in order to render Gewirth’s proof as correct. This axiom aims at representing the intuitive notion of “seeking the good”.” In essence, they tried to find the minimal axiom needed to allow the proof to hold. explicationGoodness3 states that, “In all possible contexts, for all purposes P and agents A, if P is possible, then P is obligatory if P is necessarily good for A.” Are all necessary goods obligatory to fulfill? This certainly seems debatable. Picking apart precisely what the statement means already requires careful study. There is an open invitation for someone to find a more soundly convincing set of premises for the proof 😉.

The main point is that now the debate about whether this normative ethical theorem holds or not can be very precise and held at the level of definitions, logical frameworks, and how they map up with reality. Yay!

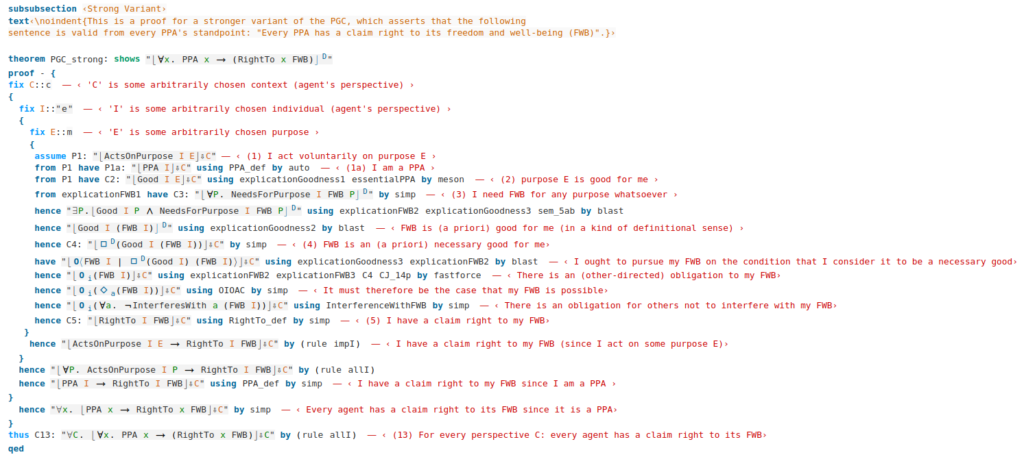

Below is a screenshot of the core proof:

SUMO

Below I sketch out what some of the definitions, axiomatizations, and lemmas may look like in SUMO. Gewirth’s theory is a deontological imperative theory that focuses on justifying one core claim right, which could also be framed as a prohibition on interfering with any agent’s freedom. I decided to drop the contextual and dyadic deontic logic operators below to simply show a glimpse of how these concepts would look in SUMO, which supports generic HOL expressions5I believe it would be fairly direct to translate the shallow semantic embeddings, too..

text‹{The type chosen to represent what Gewirth calls "purposes" is not essential for the argument's validity.

We choose to give "purposes" the same type as sentence meanings (type 'm'), so "acting on a purpose" would be

represented in an analogous way to having a certain propositional attitude (e.g. "desiring that some proposition obtains").}›

consts ActsOnPurpose:: "e⇒m⇒m" ― ‹ ActsOnPurpose(A,E) gives the meaning of the sentence "A is acting on purpose E" ›

consts NeedsForPurpose:: "e⇒p⇒m⇒m" ― ‹ NeedsForPurpose(A,P,E) gives the meaning of "A needs to have property P in order to reach purpose E" ›

text‹{In Gewirth's argument, an individual with agency (i.e.~capable of purposive action) is said to be a PPA (prospective purposive agent).}›

definition PPA:: "p" where "PPA a ≡ ∃E. ActsOnPurpose a E" ― ‹ Definition of PPA ›

(documentation PurposiveAgent EnglishLanguage "A purposive agent is an agent that has a purpose.")

(subclass PurposiveAgent AutonomousAgent)

(<=>

(instance ?AGENT PurposiveAgent)

(and

(instance ?AGENT AutonomousAgent)

(exists (?PROC ?PURP)

(and

(agent ?PROC ?AGENT)

(instance ?PROC AutonomousAgentProcess)

(hasPurposeForAgent ?PROC ?PURP ?AGENT)))))An agent is purposive if and only if there exists an instance of it taking a behavior that has a purpose for it.

The notion of good is mapped into SUMO as a SubjectiveAssessmentAttribute. I defined both this attribute and a standalone binary predicate goodForAgent.

;; consts Good::"e⇒m⇒m"

(documentation goodForAgent EnglishLanguage "Expresses that the proposition expressed by

?FORMULA is good for ?AGENT.")

(domain goodForAgent 1 AutonomousAgent)

(domain goodForAgent 2 ?FORMULA)

(instance goodForAgent BinaryPredicate)

(documentation Good EnglishLanguage "Good denotes that some entity is good

in some manner: it is desired, approved of, has the requisite qualities,

provides benefits, etc.")

(instance Good SubjectiveAssessmentAttribute)

(<=>

(goodForAgent ?AGENT ?PURP)

(subjectiveAttribute ?PURP Good ?AGENT))

;; axiomatization where explicationGoodness1: "⌊∀a P. ActsOnPurpose a P → Good a P⌋"

(=>

(and

(instance ?AGENT PurposiveAgent)

(instance ?PROC AutonomousAgentProcess)

(agent ?PROC ?AGENT)

(hasPurposeForAgent ?PROC ?PURP ?AGENT))

(goodForAgent ?AGENT ?PURP))The first axiom of goodness says that: when an agent acts on a purpose, this purpose is good for the agent6I don’t see a good way to denote that a purpose has been acted on. The best I have found is to say that there exists an instance of the action, which is implicit here..

The second axiom says that: when a purpose is good for an agent and the agent needs some property for the purpose, then it’s good for the agent to possess this property.

;; axiomatization where explicationGoodness2:

;; "⌊∀P M a. Good a P ∧ NeedsForPurpose a M P → Good a (M a)⌋"

(=>

(and

(goodForAgent ?AGENT ?PURP)

(needsForPurpose ?AGENT ?PROP ?PURP))

(goodForAgent ?AGENT (attribute ?AGENT ?PROP)))

(documentation needsForPurpose EnglishLanguage "?AGENT needs to have

property ?PROP for purpose ?PURP. (Taken from Benzmüller and Fuenmayor's

formalization of Gewirth's Principle of Generic Consistency.)")

(domain needsForPurpose 1 AutonomousAgent)

(domain needsForPurpose 2 Attribute)

(domain needsForPurpose 3 Formula)

(instance needsForPurpose TernaryPredicate)

;; axiomatization where explicationGoodness3: "⌊∀φ a. ◇φ → O⟨φ | □ Good a φ⟩⌋"

(=>

(modalAttribute ?PURP Possibility)

(modalAttribute

(=>

(modalAttribute

(goodForAgent ?AGENT ?PURP) Necessity)

(exists (?IPROC)

(and

(realizesFormula ?IPROC ?PURP)

(agent ?IPROC ?AGENT)))) Obligation))The third axiom states that: from an agent’s perspective, if a purpose is possible, then there is an obligation to attain this purpose if it is contextually necessarily good for the agent7In this dyadic deontic logic, there can only be an obligation to do that which is possible.. The form in SUMO is stronger due to dropping the contextuality — the form in Isabelle/HOL is the weakest version they found that allowed the proof to work!

Next is Freedom8I don’t see anywhere the “wellbeing” aspect of FWB comes in to the proof, so I dropped it., an attribute which can apply to agents. Freedom is needed for all purposes of all agents. Furthermore, for all agents, it is both possible for the agent to be free and for the agent to be unfree (i.e., it is a non-trivial attribute).

;; consts FWB::"p" ― ‹ Enjoying freedom and well-being (FWB) is a property (i.e.~has type @{text "e⇒m"}) ›

(documentation Freedom EnglishLanguage "Freedom (and well-being) is a

property necessary for being capable of purposeful action.")

(instance Freedom Attribute)

;; axiomatization where explicationFWB1: "⌊∀P a. NeedsForPurpose a FWB P⌋"

(needsForPurpose ?AGENT Freedom ?PURP)

;; axiomatization where explicationFWB2: "⌊∀a. ◇ FWB a⌋"

(=>

(instance ?AGENT AutonomousAgent)

(modalAttribute (attribute ?AGENT Freedom) Possibility))

;; axiomatization where explicationFWB3: "⌊∀a. ◇ ¬FWB a⌋"

(=>

(instance ?AGENT AutonomousAgent)

(modalAttribute (not (attribute ?AGENT Freedom)) Possibility))

The definition of having a right is not well-defined in SUMO9At present, holdsRight only appears in the consequent of rules.. A (Hohfeldian) claim right to a property P entairls an “other-directed” obligation on all agents to not interfere with the right of an agent to possess property P.

;; definition RightTo::"e⇒(e⇒m)⇒m"

;; where "RightTo a φ ≡ O(∀b. ¬InterferesWith b (φ a))"

(=>

(holdsRight ?FORM ?AGENT1)

(modalAttribute

(forall (?AGENT2)

(not

(inhibits ?AGENT2

(KappaFn ?PROC

(and

(realizesFormula ?PROC FORM)

(agent ?PROC ?AGENT1)))))) Obligation))Finally, the main theorem that every purposive agent has a claim right to its freedom.

;; theorem PGC_strong: shows "⌊∀x. PPA x → (RightTo x FWB)⌋" (=> (instance ?AGENT PurposiveAgent) (holdsRight (attribute ?AGENT Freedom) ?AGENT))