Research Shorts: ATP for HLAI and Can XGBoost Learn Subsumption?

It may come as a surprise to you that some interesting, successful research projects wind up unpublished because they don’t go far enough to qualify for presentation at a conference or acceptance to a journal. Published results are a lossy approximation to the sum total of human innovation and exploration: sometimes results may be emphasized and at other times they may be underwhelmingly reported. Not to mention researchers outside academia have less incentive to publish work (let alone military and security researchers or ingenious black hackers).

The topic of the paper this post is based on was “Semantic Embeddings for Machine Learning of Saturation-Style Reasoning.” The IJCAI conference had a special session on “Understanding Intelligence and Human-level AI in the New Machine Learning era”. The work we do is neuro-symbolic, integrating learning and classical AI, learning and reasoning, so we figured writing flowery prose and giving the submission a shot! It’d be a fun audience 😉.

A lot of the poetic rambling made it into the EnigmaWatch I post. Hey, benefits can accrue even from ‘failed’ submissions! The reviewer feedback was fairly reasonable, too.

Mathematics and Human-Level AI

Apparently, logic has generally been intended as a formalization of human reasoning. Especially for the analysis of composable systems. Once the human reasoning has been expressed in a logical language, correct reasoning can be verified and clearly communicated to others. One tends to assert that all well-specified problems can be framed in the language of mathematics, thus AI for general mathematical problems is necessarily AI for general problem-solving. And does this not include any task humans do that we care about?

The body of mathematics is a zoo of quintessential reasoning approaches and diverse concepts. Math aspires to abstract redundant concepts out of the picture and to cover all interesting phenomena, which is interesting aside from the fact that we’re in the era of big data where subtle differences across many samples (such as cat photos) assist a machine learning algorithm in developing good, ‘general’ models. Fortunately, our trusty theorem provers can develop many alternative proofs for the same theorems at least? 😋

The flip side is that automated theorem proving (ATP) cannot be generally solved without attaining near general AI. Somehow getting most human mathematics might be possible via an AlphaLogicus system, however.

I would love to see the following two facets of general AI systems receive more attention in the ATP field:

1. Semantic Memory allows past experience to help an AI to prove a new problem.

Everything in a programming language is technically syntactic, that is, symbolic. The precise definition of semantics is often debated. Early 2020 Zar posits that semantics is about mappings between domains. Can we map “apple” to real-world apples (or to images of apples in our mind’s eyes)?

One way to think about this in terms of theorem proving is in terms of the relationship between the features that the AI model will see and the broader context in which the theorem is being proven.

This means that using clause (\(\varphi_C\)) and goal conjecture (\(\varphi_G\)) features together sets the stage for more semantic learning than just using clause (\(\varphi_C\)) features. What the clause “really means” can be more understood with the conjecture than on its own.

Including a proof-vector (\(\varphi_\pi\)) adds more contextual information that could encode the relation of a clause to other mathematical proofs. Given that words in natural languages are often defined in terms of other words and ‘meaning’ is ‘semantics’, ENIGMAWatch has even more semantic memory and learning than ENIGMA or ProofWatch alone.

The graph neural network guidance for E can load 500 processed clauses into the network as the “proof state context”, which probably allows for even greater semantic learning.

Why not give E access to any possibly beneficial information so that it can learn literally anything about the meaning of a clause in a proof search of a conjecture in a mathematical theory in a planetary civilization of mostly generally intelligent bipedal mammals in the Milky Way in this very Universe?

— Sounds good. Yet then the learner may be overwhelmed and struggle to make as much sense as with the clause features alone (\(\varphi_C\)). What’s the phrase? “Make everything as simple as possible, but not simpler”. Maybe we should just work on premise selection, filtering the knowledge base for relevant information, and stick to the full knowledge setting? That’s an interesting proposition.

2. Meta-learning – learning to learn. Usually in machine learning we humans learn how to better apply the learning algorithm or even develop new ones. For general learning, ideally, the learning algorithm will learn how to modify and adapt itself to be more effective. In mathematics, meta-learning seems related to concept learning and conjecturing new theorems. These are like new features that can help to do what the AI can already do more simply or to further explore the unknown mathematical realms.

The ATP itself should be formalized in such a manner that the ATP can prove theorems about itself. And the ATP should be able to enact such alterations.

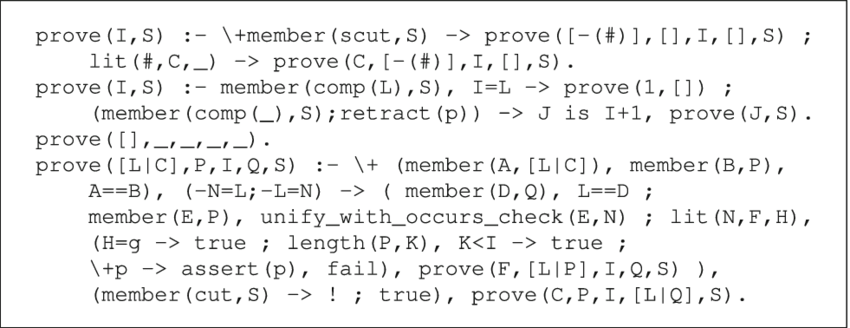

As to the formalization, a simple Tableaux based “proof search algorithm” was formalized in Coq in an Intro to Computational Logic textbook I read and recommend. leanCoP is a very concise theorem prover developed by Jens Otten and should technically be amenable to formalization. Below is the core code of leanCoP 2.0:

Cezary implemented leanCoP in HOL Light so that its proofs can be certified. I’m unaware of implementations of larger theorem provers such as Vampire or E within an interactive theorem proving library.

The general schema of a meta-learning agent that proves modifications of itself that will be better by its current performance measure is laid out by the great and glorious Jürgen Schmidhuber as the Gödel machine. Schmidhuber also is the co-author of one of the better accessible descriptions of meta-learning I’ve found. Thus one would want to use leanCoP to prove that an improvement of leanCoP will perform better (over relevant domains) — Oh, one needs the AI to conjecture the improvement on its own. One wants to close the loop in the Ouroboros fashion: not only does the theorem prover learn how to prove theorems better, but the theorem prover also learns how to make itself into a better learner of how to prove theorems and proves that these upgrades are actually upgrades.

At present, we humans are part of this General Intelligence loop of the theorem provers. A necessary part.

The goal of meta-learning is to identify what AI researchers and developers do and to automate these, to pass the baton on to computers and their programs.

One approach is to work in a language suited to reasoning about programs, proofs, logics, and type systems so that the AI can formulate questions such as, “what type system is good for developing programs to identify super cute and funny cat pictures?” Jonathan Warrell, Alexey Potapov, Adam Vandervorst, and Ben Goertzel are developing the MeTTa language to serve this purpose.

XGBoost for Subsumption

This project was after the mini ENIGMAWatch before Jan’s multi-index subsumption scheme using top-level predicate symbols as codes was developed. The idea was to cluster the clauses so that an XGBoost model would assign a cluster to each clause and subsumption for the watchlist would only be checked for clauses in the cluster.

To this end, we took a bit over one million clauses from Mizar40 and fed this to Josef’s MoMM tool to check for subsumption.

The first test with this subsumption matrix was to see if XGBoost and LIBLINEAR can tell when one clause subsumes another clause. This should work because subsumption is symbolic as are the ENIGMA features. LIBLINEAR only had \(77\%\) accuracy. XGBoost achieved \(98\%\) accuracy.

The first experiment with 1000 clusters (of at most 15,000 clauses) used a \(20\%\) test set and achieved \(85\%\) accuracy at assigning clauses to the appropriate cluster (which doesn’t say how many subsumed clauses are in the cluster nor if all of them are in it). As is, XGBoost would need a tree for each class K, which is too slow.

I had a lot of fun trying hacky hierarchical clustering approaches: first splitting clauses into \(k \in [2-5]\) clusters and then recursively splitting each cluster into \(k\) subclusters. Repeating the process until the clusters are small enough. Then XGBoost with small models of 100 trees predicts each \(k\)-split with at least \(96.7\%\) accuracy. This speeds the estimated evaluation time up from 200 milliseconds per clause to 16 milliseconds per clause.

The code-based method of Jan’s satisfies the desiderata of this clustering in a simple, algorithmic way and seems to be a superior approach.

One idea that could be explored is to skip the subsumption step and to just use the output of the ML model directly. If XGBoost thinks this clause ‘subsumes’ a clause in this proof, then maybe it’s a good idea even if it’s not an exact fit, so we could try to prioritize it and take note in the proof-vector. So we’d find ways to group clauses by proof or region in a mathematical space and then to train models to measure relationships between clauses and these groups. This is an abstraction of the semantic approach behind ProofWatch and might still be interesting.

An additional note is that the fact that XGBoost does a reasonable job at predicting subsumption suggests the features may not be so bad at providing salient information to the machine learning models 😉.

If you’re curious, I’ve uploaded the draft.