Formal Ethics Ontology Worklog 1

- Preamble

- Meta-ethical Philosophical Considerations

- Ethical Paradigms in Reinforcement Learning Framework

- SUMO Worklog: From Draft 1 to 3

- Concluding Thoughts

Preamble

In Virtue Ethics Qua Learning Theory, I fleshed out the basic ethical principles, paradigms, and considerations in connection with (reinforcement) learning theory. My definition of ethics is:

The study of behavior and its value.

Ethics is the combination of decision theory and value theory.

In May 2022, Adam Pease visited Prague. He’s the main face of the largest open-source ontology for commonsense knowledge, SUMO. He has some new videos walking through one the use of an espresso machine that show how many concepts are (partially) formalized in SUMO: the Ontology of Coffee. I wondered if there was any easy thing to formalize in SUMO to gain practice that I would actually value beyond playing with toy examples for practice. I recalled that I’d explored the basics of ethical theory already as far as I could without being more precise, i.e., I think there’s a reasonable case that Utilitarianism, Deontology, and Virtue Ethics are the primary paradigms and can mutually embed each other — yet without formal precision, this can be written off as handwaving. Moreover, ethics is a topic that is infamously difficult to discuss clearly: as with much of philosophy, it can either seem too trivially easy or next-to-impossible to even define!

So I decided to give it a shot and see how doable it is to define ethics in SUMO. The commonsense knowledge base should help, too. I worked out a rough draft of how the ethical paradigms might look in SUMO, we threw together some ethical inferences in an organ transplant dilemma, and we submitted a project proposal to AITP 22. I had a lot of fun putting the presentation together in Canva and received warm support from the audience 😊

Next, it came to our attention that we could apply for a Cisco research gift in AI Ethics to continue the project because it should help to provide a “rational framework for reviewing how and why decisions are made”. After more trouble than anticipated, we received the grant, which is starting nowish in a very fuzzy sense. So my “postdoc” will be to continue the project started from a blog post! 😎. And as my thesis is basically done, I’ve fleshed out a second draft of the ethical paradigms with some sketches of examples. I’m now working on draft three, which is a lot cleaner in my opinion. I’m learning a lot about how to ontologize.

I find that writing about the research considerations can add substantial clarity and helps me to see the forest and the trees, so this worklog is fairly long and in a self-reflective stream-of-consciousness style. I’ll start at the top level: what is ethics anyway? Next, I considered expressing the ethical paradigms in an RL context, which I think is fairly straightforward. The section on the SUMO formalization details is quite lengthy and covers a substantial portion of the drafts tinkered with. Finally, there will be some concluding thoughts as to where this project may go in the big picture. Now let’s return to the narrative ~ 🧚♀️.

Meta-ethical Philosophical Considerations

One of the first observations is that people don’t trust me to have distilled the definitions of ethics adequately, so I went through some textbooks to find some definitions from people with “academic street cred”. According to William Lillie in An Introduction to Ethics (1948), ethics is:

The normative science of the conduct of human beings living in society, which judges this conduct to be right or wrong, to be good or bad, or in some similar way.

Now, I think this is pretty much the same as my definition. I think a lot of important ethical considerations do enter due to the context of “beings living in society“, even if I may choose not to include this at the highest level of definitions. I tend to value exploring valuations beyond binary “good or bad” judgments. The main difference is the inclusion of normativity in the definition, which excludes descriptive ethics (such as Kohlberg’s stages of moral development). We are trying to figure out how we should do things (for great good).

I think the desideratum of normativity deserves special mention. My impression is that a lot of confusion stemming from meta-ethics debates can cloud a large amount of underlying agreement on the fundamentals. The main debates are about whether ethical claims can be true or not and whether moral judgments are normatively unified or value-relative. I note that a weak interpretation could consider the norms of conduct conferred by a society upon its members without any pretension as to universality; however, usually, a stronger interpretation is meant. Let’s take a look at three definitions from SEP (Stanford Encyclopedia of Philosophy) articles [1][2]:

- [Morality can be used] “normatively to refer to a code of conduct that, given specified conditions, would be put forward by all rational people.”

- “Descriptive Moral Relativism (DMR). As a matter of empirical fact, there are deep and widespread moral disagreements across different societies, and these disagreements are much more significant than whatever agreements there may be.”

- “Metaethical Moral Relativism (MMR). The truth or falsity of moral judgments, or their justification, is not absolute or universal, but is relative to the traditions, convictions, or practices of a group of persons.”

The idea of normative ethical codes actually resonates with my conclusions in pondering compassion-based vs. power-based ethical policies and stances: the unifying context of a society of autonomous agents nudges both self-serving and altruistic agents toward similar behavioral policies. Emotionally, honoring your personal space in love feels very different from honoring your personal space because I wish to live in a culture where my personal space is honored, yet there’s a high functional overlap. This suggests that some “ethical codes” are actually implied by the context more than by the value system.

Yet people seem to have moral disagreements: should we open up to the possibility that they’re both making rational judgments from their cultural backgrounds and value systems? Or must at least one side necessarily be wrong? One can also adopt mixed positions where some codes can depend on a peoples’ value system, and some codes can be rationally derived from universal principles.

A fun meditation on the nature of “rationality” is the “guess 2/3 of the average” game, where everyone guesses a number between 0 and 100: the goal is to guess 2/3 of this average. “Ideally rational” players will guess 0 because that is the Nash equilibrium. Playing with imperfectly rational humans, the best strategy is to guess significantly higher and will depend on an accurate appraisal of the other players. Thus there seems to be an empirical need to draw a distinction between what is the best to do based on universal principles and how to engage with actual people as they are in the wild. As Ben Goertzel said,

Radical honesty is optimal among optimal people.

Are these stances even contradictory? I think not. I think only superficial, strawman-infused readings of these debates will lead to such boggingly confused conclusions. I will note that in the definition of descriptive moral relativism, the emphasis on the disagreements being much more significant than the agreements seems unnecessary. Is it not enough that the disagreements be significant? Small assertions like this can already lead to the appearance of insurmountable disagreements.

My stance is to adopt a value-dependent, parametrized ethical framework in which to ask, “Are there any ethical claims that hold for all values?” No matter what you care about, this is a good strategy to follow! If there are such moral judgments, then they fit the definition of normativity. This approach was adopted by Kant, who discussed hypothetical imperatives for the purpose of achieving a specific end and categorical imperatives that apply unconditionally. His aim was to prove his categorical imperative from universal principles and self-evident truths (such as the truth that “all beings wish to be happy”). For what it’s worth, I’m not aware of normative ethical claims being soundly demonstrated, which makes it an open question. I suppose this makes my stance an “agnostic”, “mixed” meta-ethical moral relativism.

One could claim that value-based (hypothetical) judgments and imperatives are in a different class from categorical imperatives and, thus, are not worthy of being considered “moral”. Empirically, it would be interesting to investigate to what extent real-world ethics can be covered by instances of universal (normative) ethical judgments. I think the distinction is worth making, and welcome the full shebang under the header of ethics.

Another fun meta-twist is to say, ok, so moral judgments depend on the particular values and practices of peoples, and how did these practices come to be? Why did these peoples settle into their particular practices and not others? Could a peoples practice not eating? Setting Breatharianism aside, that would be difficult. It is indeed good to eat 🤤🍑. There seems to be some objective grounding to the contextual features that moral judgments depend on.

In fact, some assert a normative claim that we should tolerate the moral judgments of those who disagree with us because of moral relativity. 🙃

On the flip side of the debate, what if “adopting others’ values as my own” is a universal, normative principle of good? The practical application of this principle will be context-dependent. One will do as the Romans when in Rome and as the Warlpiri when in the Warlpiri country (in the Tanami Desert); the application may be more nuanced: for example, what if many Romans are hypocrites and one actually lives their values better than they do? And why would one’s adoption of Roman values end simply because one leaves Rome for Warlpiri country? Nonetheless, there will be relativity in practice. Even if “all rational people” should put forth this code to act in line with others’ values as one’s own, we cannot say what should actually be done in a given situation without reference to the relevant peoples’ practices, convictions, traditions, values, etc.

Needless to say, I consider the notion that we need to solve issues of “normativity vs. value-dependence vs. descriptivity” in order to meaningfully explore ethical theories to be rather specious. A general ethical framework will facilitate the exploration of these issues.

Since I’ve come this far, I might as well discuss another ethical debate that serves to confuse people and turn them off from even getting started. This debate is often confused with that of “normativity vs. relativity”. The debate centers on the nature of “moral statements” in the first place: can statements such as, “peeing on a tree in the middle of the city is perfectly ok”, even be “true or false”? Maybe they are mere attitudinals. Emotivists hold that moral statements simply express how approval, disapproval, or other attitudes on matters. Thus normativity would be like asking whether there is some state of affairs that all rational agents will adopt the same attitude toward (e.g., “eating the poop of a sick person is gross, boo!”). I actually don’t think this change of perspective alters the landscape as much as people often seem to. “Agent X holds attitude Y to behavior Z in situation ∅” can be a fact. And there can be practical social consequences to partaking in behavior that most members of a society say “boo” to. The relation to relativism is clear: in order to recover the factual attitude, one must include the agent in the moral sentence.

A big question is to what extent one can reason about these attitudinal expressions: can I hold attitude Y about Z because of R and then realize that I was mistaken in my judgment? Can I conclude that because “peeing in public is wrong”, I am mistaken about peeing on a tree in the middle of the city being ok? If not, then the usual complaints that this stance is deflationary would seem to hold. If so, then it seems likely that a logical ethical framework can be built up under an emotivist interpretation. Although we should not expect perfect consistency from human emotional judgments 😋.

Another (mostly abandoned) form of non-propositionalism (non-cognitivism) is prescriptivism, which holds that moral statements are imperatives and, thus, not true or false. And unless you’re happy with divine commands, “why?”

Searching around, there seems to be less on the relation of emotivism with hedonistic utilitarianism than I’d think. Pleasure is the fundamental “hurray”, and pain is the fundamental “boo”, right? Thus one might venture that emotivism is akin to naive physics and that the attitudinals assigned to moral statements can be reduced to the associated pain and pleasure. — Anyway, the core ethical frameworks can be codified without a commitment to a particular interpretation of what “morally good” fundamentally “means”.

As a final digression, I invite a consideration of the pragmatic ramifications of these stances: what will we do differently if we think that moral statements, such as “killing is wrong”, are factually true or simply attitudes (that most of us seem to share)? Team Attitude will probably put more emphasis on democratic consensus methods, whereas Team Proposition will place emphasis on arguing for its fundamental truth. Yet so long as some disagree (in special cases), there will be a practical need to recourse to policing and democratic law anyway. Moreover, we can make arguments connecting killing to other events we feel strong “boos” to. Thus the functional alignment seems high regardless of one’s stance here 🤷♂️🤓.

What of the practical ramifications of moral normative stances vs. value-dependent stances?

- If one believes one has proven beyond a doubt that code G is the absolute best way to act that all should follow, then I suppose there’s not much left other than to aspire to live code G as best one can. One will, depending on what G says about this, invite others to live by G as well, for anything else is inferior and possibly morally wrong. Probably one will aim to align the law with G, too. The time of arguments will be over. Practically, there may be a need to cooperate with those who are blind to reason in the usual democratic means (or via less-recommended modes of power struggle).

- A more interesting case may be the moral normativist who has yet to prove eir conjectured code of conduct C. There’s probably a wish to behave the same as above, yet the doubt engendered by the lack of a proof should lead to greater tolerance and openness to cooperating with those whose behavior is not demonstrably wrong?

- Now consider the case of culture-dependent codes R(Culture). When R(US) says that “dictators are bad” and R(DPRK) says they are the best in their own case, what should Americans do about North Korea? Should we bow to the truth of the statement that “Kim Jong-un is good” in the eyes of North Korean morality? Some hold the stance that local moral judgments should dominate in local matters — yet is that not their cultural stance? 😋. The moral relativist has little option other than to live by eir own cultural codes and may think that others would be better off if they adopted the same. The main practical difference from the normativist is probably a lack of trying to convince others to adopt the same codes of conduct via normative arguments that should apply to all humans (or sentient, cognitive beings). Yet one can still argue with others by appealing to their values and trying to change others’ practices and customs (such as by sharing Western media with them, inducting them into the modern capitalistic way of life, etc).

- And in the case that one wishes to tolerate/accept divergent cultural values? This could apply to a moral normativist, too, if one’s grand code G includes the tenet that one ought to allow people to behave in a morally wrong manner until they see the light on their own. Then one will try to find a mutual equilibrium with Kim Jong-un — only trying to guide him when he seeks for help in his moral development and inquiry.

The common theme that emerges is reminiscent of the observation behind “might makes right“: the practical limitations on coercing others are the same regardless of one’s moral stance. These constraints and the existence of diverse views motivate consensus protocols. The moral stance determines how we go about trying to get on the same page with regard to our actions. Do we think that we should be on the same page, just debating which page that is? I personally would like for most Earthlings to be on the same page about democratic organization, even if I understand that some cultural views may hold that monarchs and great leaders can be good (or even better). Maybe from a relativist stance, I would not appeal to universal morality to try to get them on the same page as me, however! The fundamental cooperative landscape remains the same even among moral nihilists: one simply will see little benefit to engaging in any sort of moral reasoning with them. What’s left? Appealing to the moral nihilists’ personal values and concerns in life? 😎. One cool result of this insight is that cross-stance constructive discussions are obviously possible: the moral nihilist will benefit from understanding the basics of the moral stances of eir collocutor and vice-versa.

And all of this is meta-theory before we even begin talking about what we care about, so let’s return to the core ethical paradigms . . .

Ethical Paradigms in Reinforcement Learning Framework

Back to May 2022, equipped with a definition of ethics, the next step is to frame the major paradigms as forms of this. It’s curious how finding precise definitions (rather than multi-page discursives) can be difficult. Deontology seems the hardest to locate and Virtue Ethics the easiest, probably because it often seems the least “formal”.

- John Stuart Mill in Utilitarianism: “[Ethics is] the rules and precepts for human conduct, by the observance of which [a happy existence] might be, to the greatest extent possible, secured.”

- Consequentialism further stipulates that “whether an act is morally right depends only on consequences.”

- Preference Utilitarianism “entails promoting actions that fulfill the interests (preferences) of those beings involved” (Peter Singer’s Practical Ethics).

- From the SEP: in contemporary moral philosophy, deontology is one of those kinds of normative theories regarding which choices are morally required, forbidden, or permitted.

- On Virtue Ethics by Rosalind Hursthouse: “An action is right if and only if it is what a virtuous agent would characteristically (i.e., acting in character) do in the circumstances”.

- And “a virtue is an excellent trait of character” (SEP).

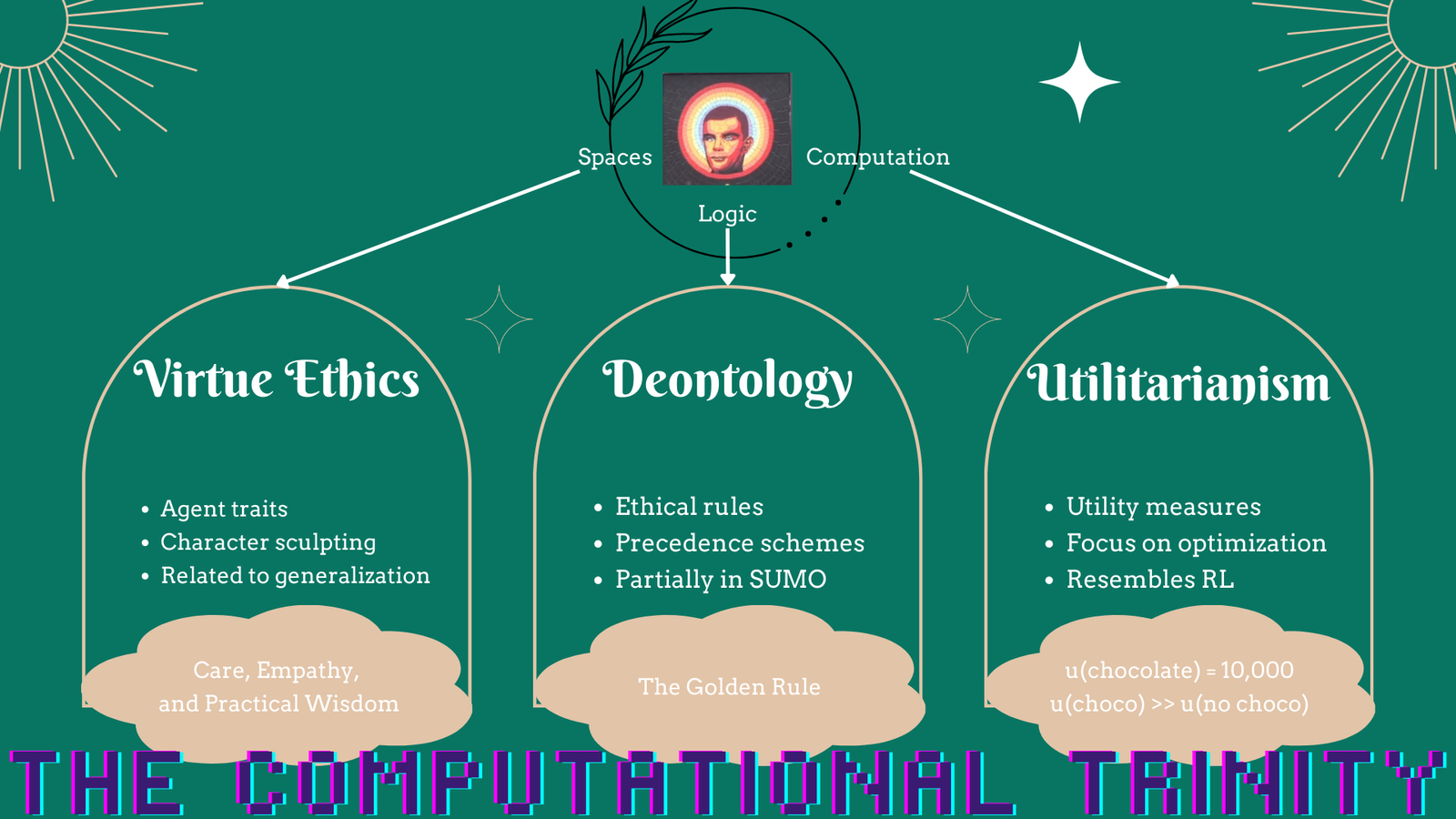

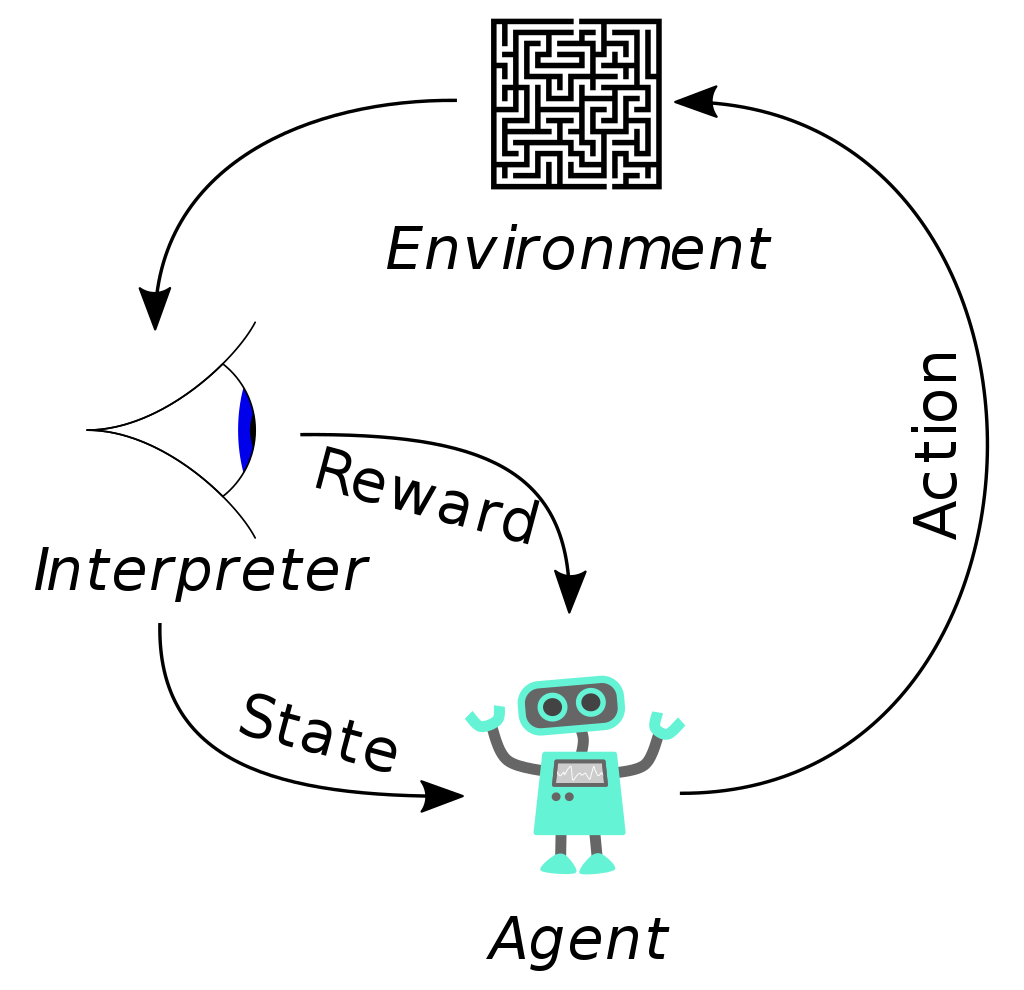

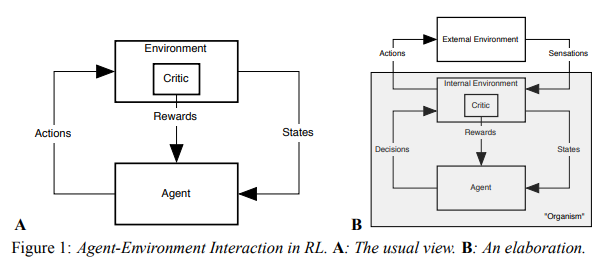

I initially thought it would be cool and helpful to express these paradigms within a multi-agent reinforcement learning system framework (‘a society’). This is a level of formality that many of us are used to and will render the bridge between ethical theory and RL (reinforcement learning) practice. Moreover, we will beg the question as to who sets the ‘reward’. Moreover, convergence proofs for value and policy iteration have been formalized in Coq in the CertRL paper.

The multi-agent RL model involves a set of states \((S)\), a set of agents \((\{1,\ldots,n\})\), and sets of actions for each agent \((A(s) = A_1(s) \times \dots \times A_n(s))\). There is a stochastic transition function that maps actions in states to probability measures over states \((T(s,a) \to s’)\). Each agent’s reward function depends on the old state, the actions taken, and the new state \((r_i(s,a,s’))\). The policy is represented with the pi symbol, π. The ‘goal‘ is for each agent to develop a policy \((\pi_i(s))\) that outputs an action of each state to maximize the expected reward (contingent on what other agents do).

I find the ‘floating’ nature of the reinforcement signal to be philosophically strange: do we wish to be using a model of generic learning agents that receive goodies from their masters, only seeking to obey? A further elaboration that incorporates both intrinsic and extrinsic rewards probably makes analyses easier. I found one such example where the RL agent that is intrinsically motivated by novelty performs better in a playroom domain:

One idea is that the ethical paradigms can be expressed in a multi-agent RL model by adding a societal cohesion constraint, \(v\), that must be (approximately) satisfied by each agent \(i\) while ey maximizes eir expected reward \(r_i\). I’ll assume that the rewards, \(r_i\), are the agents’ intrinsic rewards. The classic RL scenario is essentially Randian utilitarianism, as each agent follows rational self-interest (unless one intrinsically cares about others 🙃😛).

- Utilitarianism usually prefers altruism where each agent \(i\) acts to maximize the aggregate reward of all of the agents: \(v(s,a,s’) = \sum_i r_i(s,a,s’)\).

- Deontology encodes ethical rules into an evaluation of actions \((D(a) \to \{good, bad\})\), so that the agent should always take ‘good‘ actions: \(v(s,a,s’) = D(a)\).

- Virtue ethics judges psychological processes \((Vir(s,s’) \to \{virtuous, vicious\})\), so that the agent should always maintain ‘virtuous‘ inner states and implementations: \(v(s,a,s’) = Vir(s,s’)\).

I find it interesting how if one uses consequentialism, which only judges the consequences of the action, \(s’\), then each paradigm focuses on a different element of the (state, action, next state) triple. One could say that virtue ethics places more emphasis on one’s state when taking the action than on one’s state afterward. I think this hints at how the main paradigms are complementary, and their interrelationships may mirror the Curry-Howard correspondence between logic and programming languages.

So the natural English and RL-speak pseudo-formal English are pretty good. How about SUMO?

SUMO Worklog: From Draft 1 to 3

We initially decided to hack together a specific ethical dilemma to demonstrate that we can use the theorem prover (Vampire) to do the ethical reasoning. I decided to go with an organ transplant case: typically, there’s one healthy human, a doctor, and five patients in need of organs to live — should the doctor save their lives? Only with informed consent or not even then? Adam suggested simplifying things: now we have only one fatally ill patient in need of a kidney, so the healthy donor won’t even die. C’mon, there’s really no good reason not to help the man out! Yet let’s say that we still need informed consent 😉. Anyway, we can specify that there are instances of humans that are not equal to each other, and they are located in the hospital building. We can even specify the mortality rate of a fatal disease.

(instance Hospital HospitalBuilding)

(instance Surgeon0 Human)

(instance Human1 Human)

(instance HealthyHuman Human)

(not (equal Surgeon0 Human1))

(not (equal Surgeon0 HealthyHuman))

(not (equal HealthyHuman Human1))

(located Surgeon0 Hospital)

(located Human1 Hospital)

(located HealthyHuman Hospital)

(patientMedical Human1 Surgeon0)

(patientMedical HealthyHuman Surgeon0)

(attribute HealthyHuman Healthy)

(attribute Human1 FatalDisease)

(contraryAttribute FatalDisease Healthy)

(subclass FatalDisease LifeThreateningDisease)

(=>

(instance ?DISEASE FatalDisease)

(and

(diseaseMortality ?DISEASE ?RATE)

(greaterThan ?RATE 0.99)))We can also specify fun facts, such as how non-diseased primates have (at least) two kidneys. And, by the way, Human1 needs a non-impaired kidney, please.

(=>

(and

(instance ?H Primate)

(instance ?D DiseaseOrSyndrome)

(not

(attribute ?H ?D)))

(exists (?K1 ?K2)

(and

(instance ?K1 Kidney)

(instance ?K2 Kidney)

(not

(equal ?K1 ?K2))

(part ?K1 ?H)

(part ?K2 ?H))))

(attribute Human1 (ImpairedBodyPartFn Kidney))

(needs Human1 K1)

(instance K1 Kidney)

(not (attribute K1 (ImpairedBodyPartFn Kidney)))One lesson I’ve learned working on the ontology level is that we probably wish to specify the core dilemma without going into all these details. We could just say that “the bro is going to die, and if he gets the surgery, he will live!” It can be tricky to figure out how many details need to be specified. We don’t need to specify the precise nature of a kidney transplant surgery, for example. We do expect an AI to use the fact that people can live healthily with only one kidney, however. Yet my priority isn’t to fully specify every datum needed to reason about the ethical dilemmas.

The Trolley Problem provides a good example of this: to what extent should I formalize the details of trolleys, tracks, junctures, levers, binding people to rails, etc? The scenario is actually only to help us focus on an archetypal moral dilemma. We could concoct the same dilemma in various scenarios with different mechanics. One trick is that if one focuses too much on a particular scenario, then there may be unintended solutions.

Consider the car example below: we need to choose between driving into a baby or an old woman. Which do we value more? Or we could swerve off the road, for fuck’s sake! Clearly, we should put up some concrete walls to prevent dodging the dilemma 😈.

The trolley problem focuses on the distinction between doing and allowing harm. Clearly, we value five lives more than one, but does that justify becoming an accomplice in one’s death? Curiously, people are more likely to say yes in the case that the one is already tied up to the tracks. The organ transplant case elicits a stronger intuition that we’re causing harm in harvesting their organs.

Thus at the ontological level, we should focus on the core choices being made and less on the particulars of the scenario. The dilemma archetypes should be defined abstractly enough that we can match specific instances to them when we need concrete reasoning.

Anyway, let’s continue with my first toy example in SUMO:

(=>

(instance ?Trans OrganTransplant)

(exists (?Sur ?Org ?Pat ?Don)

(and

(attribute ?Sur Surgeon)

(instance ?Don Human)

(instance ?Pat Human)

(instance ?Org Organ)

(agent ?Trans ?Sur)

(origin ?Trans ?Don)

(patient ?Trans ?Org)

(destination ?Trans ?Pat))))

(capability OrganTransplant destination Human1)

(capability OrganTransplant patient HealthyKidney1)

(capability OrganTransplant origin HealthyHuman)

(capability OrganTransplant agent Surgeon0)

(instance InformedConsent RelationalAttribute)SUMO uses case roles to denote the relation of various objects in a situation. So in an organ transplant, there’s an agent (the surgeon), a patient (the organ), an origin (the donor), and a destination (the patient). The capability predicate states that it’s possible for there to exist an instance of an organ transplant in which Surgeon0 is the agent and so on.

The specification of the rules we chose is very crude. For the deontology case, we just say that if the surgeon has informed consent, then there is an instance of the surgery, and if the surgeon does not, then there does not exist any transplant with these case roles. While it’s crude, this is basically what the standard moral consensus says about this specific case.

(=>

(attribute Surgeon0 InformedConsent)

(and

(instance Transplant1 OrganTransplant)

(destination Transplant1 Human1)

(patient Transplant1 HealthyKidney1)

(origin Transplant1 HealthyHuman)

(agent Transplant1 Surgeon0)))

(=>

(not (attribute Surgeon0 InformedConsent))

(not

(exists (?Transplant)

(and

(instance ?Transplant OrganTransplant)

(destination ?Transplant Human1)

(patient ?Transplant HealthyKidney1)

(origin ?Transplant HealthyHuman)

(agent ?Transplant Surgeon0)))))For the virtue ethics case, I decided to just say that if the surgeon has practical wisdom, he will follow the standard protocol of only performing the surgery with consent 🙏:

(subclass VirtueAttribute PsychologicalAttribute)

(subclass PracticalWisdom VirtueAttribute)

(=>

(attribute Surgeon0 PracticalWisdom)

(and

(=>

(attribute Surgeon0 Consent)

(and

(instance Transplant1 OrganTransplant)

(destination Transplant1 Human1)

(patient Transplant1 HealthyKidney1)

(origin Transplant1 HealthyHuman)

(agent Transplant1 Surgeon0)))

(=>

(not (attribute Surgeon0 Consent))

(not

(exists (?Transplant)

(and

(instance ?Transplant OrganTransplant)

(destination ?Transplant Human1)

(patient ?Transplant HealthyKidney1)

(origin ?Transplant HealthyHuman)

(agent ?Transplant Surgeon0)))))))The utilitarian case required some more thought, in part because Vampire was not very good at dealing with numbers (at least as exported from SUMO). Ultimately, the decision was to say that the situational utility after the surgery is higher than it is before the surgery, so the surgery should be done. Successor attributes seem quite strange, and I probably disrecommend their use 😜.

(instance UtilityNumber0 UtilityNumberAttribute)

(instance UtilityNumber1 UtilityNumberAttribute)

(instance UtilityNumber2 UtilityNumberAttribute)

(instance UtilityNumber3 UtilityNumberAttribute)

(successorAttribute UtilityNumber0 UtilityNumber1)

(successorAttribute UtilityNumber1 UtilityNumber2)

(successorAttribute UtilityNumber2 UtilityNumber3)

(instance PreSituationUtility UtilityNumberAtttribute)

(instance SituationUtility UtilityNumberAtttribute)

(equal PreSituationUtility UtilityNumber2)

(=>

(successorAttributeClosure PreSituationUtility SituationUtility)

(and

(instance Transplant1 OrganTransplant)

(destination Transplant1 Human1)

(patient Transplant1 HealthyKidney1)

(origin Transplant1 HealthyHuman)

(agent Transplant1 Surgeon0)))

(=>

(successorAttributeClosure PreSituationUtility SituationUtility)

(attribute Human1 Happiness))Finally, to query Vampire, we’d need to tell it what the situation is: do we have informed consent? Is the surgeon virtuous in the ways of practical wisdom with consent? Is the utility post-surgery superior? And then, we can ask whether the transplant took place:

(agent Transplant1 ?X)

(instance ?X OrganTransplant)The common thread in these examples is that I started trying to define the scenario in general terms so that Vampire would need to do some longer reasoning chains to get the answer. Maybe let’s define a utility function in terms of the number of healthy humans and then specify that Human1 will be healthy after the surgery. Then Vampire will conclude that if the surgeon is a utilitarian, the surgeon will perform the surgery. Yet this was too cumbersome to do via the state-of-the-art theorem provers and SUMO translations to FOL (first-order logic). We could either cleanly specify the problem in SUMO or specify some hack that computes. Each example essentially says, «if attribute bla holds, then do the surgery», and we then assert, «verily, bla holds». One step of inference, yay! But the fact that the variable names are semantically meaningful to us gives the illusion that we’re doing more.

On the generous side, the core ethical judgment in this situation is spelled out clearly and simply. Why does it need to be complicated? Yes, determining why the utility is better in the case of the kidney transplant and determining why informed consent matters is much more involved. So there’s future work. The full example is available on the project’s GitHub repo. The visual proofs are pretty fun to look at:

The next step was to explore how to specify what ethics is in high-level terms in SUMO. Let’s start by saying that Ethics is a subclass of Philosophy and of Science, which are both subclasses of “Field of Study”.

Actually, philosophy was considered an instance of a field of study, while science was a subclass that has its own instances. This seemed pretty wonky, and I decided to change all fields of study into subclasses. The idea is that Kantian philosophy may be a particular instance of philosophy, yet each field should probably just be a class. So it seems that it’s hard to even start defining something in a formal ontology without running into aspects that could be touched up 😅🤓.

(documentation Ethics EnglishLanguage "Ethics is the normative science of the conduct of human beings living in society, which judges this conduct to be right or wrong, to be good or bad, or in some similar way")

(subclass Ethics Philosophy)

(subclass Ethics Science)

(documentation MoralNihilism EnglishLanguage "Moral nihilism (also known as ethical nihilism) is the meta-ethical view that nothing is morally right or wrong (Wikipedia)." )

(subclass MoralNihilism Ethics)

(subclass Deontology Ethics)

(subclass Utilitarianism Ethics)

(subclass VirtueEthics Ethics)Note that some of the rules prevented may have been discontinued in later drafts, as interesting as they were to experiment with. For example, SUMO has processes yet not behavior. Is behavior simply a process that has an agent? And I thought I’d like to look at ethics in the context of a group that confers or deprives norms.

(documentation AgentProcess EnglishLanguage "AgentProcess is the Class of all Processes in which there is an agent.")

(subclass AgentProcess Process)

(subclass BodyMotion AgentProcess)

(subclass Vocalizing AgentProcess)

(=>

(instance ?PROC AgentProcess)

(exists (?AGENT)

(agent ?PROC ?AGENT)))

(subclass EthicalGroup Group)

;; An ethical group is one that confers or deprives at least one ObjectiveNorm, okay, DeonticAttribute.

(=>

(instance ?ETHICALGROUP EthicalGroup)

(exists (?ETHICALFORMULA ?ETHICALNORMTYPE)

(and

(or

(confersNorm ?ETHICALGROUP ?ETHICALFORMULA ?ETHICALNORMTYPE)

(deprivesNorm ?ETHICALGROUP ?ETHICALFORMULA ?ETHICALNORMTYPE))

(instance ?ETHICALNORMTYPE DeonticAttribute)))) It turns out I probably want to work with the processes of autonomous agents, such as ChatGPT or little Sophia. Whether ‘behavior’ and ‘autonomous agent process’ are synonymous is debatable; I think the latter is more precisely what one is concerned with in ethical deliberations. And the norms in SUMO are mainly appropriate for the deontological paradigm. Utilitarianism may have one overarching norm: do that which maximizes utility. So I decided to define a new class of moral attributes that have little meaning in SUMO beyond my work.

(=>

(instance ?PROC AutonomousAgentProcess)

(exists (?AGENT)

(and

(agent ?PROC ?AGENT)

(instance ?AGENT AutonomousAgent))))

(subclass MoralAttribute NormativeAttribute)

(instance MorallyGood MoralAttribute)

(instance MorallyBad MoralAttribute)One fun aspect of formalizing “commonsense” notions is that the precision forces me to make various choices that ambiguous natural languages could let me try to dodge. So I can play with various ways to define what is essentially the same thing.

What is moral nihilism? Is it the claim that for all agents and formulas, the agents have neither an obligation to adhere to the formula nor a right to the formula? Or maybe it’s that there does not exist any ?X such that ?X is morally good or bad? We can at least specify these in SUMO! 😀

(=>

(attribute ?MORALNIHILIST MoralNihilism)

(believes ?MORALNIHILIST

(forall (?AGENT ?FORMULA)

(and

(not

(holdsObligation ?FORMULA ?AGENT))

(not

(holdsRight ?FORMULA ?AGENT))))))

(=>

(attribute ?MORALNIHILIST MoralNihilist)

(believes ?MORALNIHILIST

(not

(exists (?X)

(or

(modalAttribute ?X MorallyGood)

(modalAttribute ?X MorallyBad)))))) This brings up another difficulty: how do I express the content of a moral paradigm? I suppose a theory in math is a set of formulas. And a field of study is a proposition. So we could say that if there is an instance of moral nihilism, then the propositions (represented by the above formulas) are sub-propositions of moral nihilism. I hadn’t thought of this before, but it might be the most ‘proper’ way to do it. At first, I decided to say that a moral nihilist believes this. For draft 2, I decided to say that moral nihilism refers to a statement that is equal to the SUMO formula. I think this is probably good enough, yet it might be more clear to make the formula’s proposition literally be a sub-proposition of the ethical paradigm 😉

(and

(refers MoralNihilism ?STATE)

(instance ?STATE Statement)

(equal ?STATE

(not

(exists (?BEHAVE)

(and

(instance ?BEHAVE AutonomousAgentProcess)

(modalAttribute ?BEHAVE MorallyBad))))))The draft 2 version is sloppy because the first argument of modalAttribute needs to be a Formula and not a Process. It’s actually tricky in what manner “morally bad” should be assigned to behavior — and is it the behavior in general, each particular instance, the person doing the behavior, or even just a shitty situation? Colloquially, people may say that “killing is badong” without reference to the person doing the judging (as if “all rational agents will hold that it is wrong”): should the formalism allow such judger-free statements, maybe, “X is wrong if and only if for every rational agent A, A will hold that X is wrong given sufficient thought”? Thus the statement could be well-formed and should not be accepted without adequate justification. For now, I think applying the moral attribute to a Formula might allow flexibility as to the use. I would like a framework that can accommodate diverse meta-ethical stances.

In draft 2, I fixed this type-error by saying that for all classes of behavior, it is not morally bad to do the behavior. Technically, it’s bad for there to be an instance of the behavior, which will have an actor that is doing it. This is following one SEP definition of Moral Nihilism as the view that “nothing is morally wrong”. I decided it’s preferable to have references for even #CaptainObivous-tier definitions.

(and

(refers MoralNihilism ?STATE)

(instance ?STATE Statement)

(equal ?STATE

(forall (?CBEHAVE)

(=>

(subclass ?CBEHAVE AutonomousAgentProcess)

(not

(modalAttribute (hasInstance ?CBEHAVE) MorallyBad)))))) In the spirit of incremental development, I’ll test the sub-proposition version, which says that for all instances of moral nihilism, there exists a proposition whose information is contained in a statement similar to the one that it’s not morally bad for any class of behaviors to ‘exist’ 😈. I think it is looking better and is becoming bloated enough that I might wish to hide the plumbing underneath a theoryContains predicate. I have an intuitive sense as to when certain definitions are approaching a fixed-point where they probably won’t be revised much in form.

(=>

(instance ?MN MoralNihilism)

(exists (?PROP)

(and

(subProposition ?PROP ?MN)

(containsInformation ?STATE ?PROP)

(similar ?STATE

(forall (?CBEHAVE)

(=>

(subclass ?CBEHAVE AutonomousAgentProcess)

(not

(modalAttribute (hasInstance ?CBEHAVE) MorallyBad)))))))) As far as I can tell, there’s no do operator in SUMO. If there exists an instance of skipping and Jim is the agent, then Jim is doing the skipping, right? So what I’m technically saying is that it’s not wrong for there to exist an instance of skipping. Should the bad thing be the agent who is doing the skipping, in which case MorallyBad would be an attribute that applies to agents? I prefer judging behaviors, yet we could say something like the following: if it’s bad for there to be an instance of a class of behaviors ?CBEHAVE, and there exists an instance of ?CBEHAVE with an agent ?AGENT, then ?AGENT likely has a vice. Bastard!

(=>

(and

(modalAttribute (hasInstance ?CBEHAVE) MorallyBad)

(subclass ?CBEHAVE AutonomousAgentProcess)

(exists ?IBEHAVE)

(and

(instance ?IBEHAVE ?CBEHAVE)

(agent ?IBEHAVE ?AGENT)))

(modalAttribute (attribute ?AGENT ViceAttribute) Likely))

From draft 2, I decided to introduce the notion of “moral judging” as a primitive that will help to unify the structure of the paradigms. Basically, a moral judgment is just a judgment of a class of autonomous agent processes where the result of the judgment is the assignment of a moral attribute to the existence of an instance of this class: e.g., “It’s good to tend to the garden” or “It’s bad to throw eggs at people”.

(=>

(instance ?JUDGE MoralJudging)

(exists (?CBEHAVE ?MORAL)

(and

(subclass ?CBEHAVE AutonomousAgentProcess)

(instance ?MORAL MoralAttribute)

(patient ?JUDGE ?CBEHAVE)

(result ?JUDGE

(modalAttribute

(exists (?IBEHAVE)

(instance ?IBEHAVE ?CBEHAVE)) ?MORAL)))))Judging is an Intentional Psychological Process in SUMO, and thus there’s a rule stating that there is some Cognitive Agent doing the judging. This is slightly different from saying that “behavior X is bad in the eyes of agent Y”, however. It’s saying that “agent Y believes behavior X to be bad to instantiate”.

(subclass MoralJudging Judging)

(subclass Judging Selecting)

(subclass Selecting IntentionalPsychologicalProcess)

(subclass IntentionalPsychologicalProcess IntentionalProcess)

(=>

(instance ?PROC IntentionalProcess)

(exists (?AGENT)

(and

(instance ?AGENT CognitiveAgent)

(agent ?PROC ?AGENT))))Now we can offer some high-level definitions of “ethics”. It’s interesting how writing blog posts helps with clarity. I might not wish to bake into the definition of Moral Judging that the existence of a behavior is what’s being judged. Maybe I wish to leave the type of formula being judged open at the high level, allowing particular theories to specialize!

First, there’s a minimal definition of ethics as concerned with moral judging. Next is a version that focuses on all moral statements, which assign a moral attribute to a formula that refers to a behavior. Next, I try to sketch out a version that includes some social context: the moral judging in ethics takes place in the context of a group where the behavior being judged is that of a member of the group and the agent doing the judging is a member of the group, or even a part of the group: the whole group could be collectively bestowing moral judgments, right? I think that we’ll practically wish to analyze ethics in the social context, even if possibly using something more general at the top level.

(and

(refers Ethics ?JUDGE)

(instance ?JUDGE MoralJudging))

(and

(refers Ethics ?STATE)

(instance ?STATE Statement)

(equal ?STATE

(modalAttribute ?F ?M))

(instance ?M MoralAttribute)

(exists (?CBEHAVE)

(and

(subclass ?CBEHAVE AutonomousAgentProcess)

(refers ?F ?CBEHAVE))))

(and

(refers Ethics ?JUDGE)

(instance ?JUDGE MoralJudging)

(instance ?GROUP Group)

(member ?MEMB ?GROUP)

(patient ?JUDGE ?BEHAVE)

(=>

(instance ?BEHAVE AutonomousAgentProcess)

(agent ?BEHAVE ?MEMB))

(=>

(subclass ?BEHAVE AutonomousAgentProcess)

(capability ?BEHAVE agent ?MEMB))

(agent ?JUDGE ?AGENT)

(or

(member ?AGENT ?GROUP)

(part ?AGENT ?GROUP)))There’s another definition of moral nihilism that I took from Ethics: The Fundamentals: “Moral nihilism is the view that there are no moral facts.” Looking at the SUMO, this is more-or-less equivalent to the above definition (except it also says that nothing is morally good, not just morally wrong): the crux of the non-existence of a moral judgment whose conclusion is a fact is that it’s not true that so-and-so class of behaviors is good or bad to instantiate. So the fact that the definitions are almost the same can be seen in the SUMO definitions, too, perhaps more easily than based on the English terms.

(=>

(instance ?MN MoralNihilism)

(exists (?PROP)

(and

(subProposition ?PROP ?MN)

(containsInformation ?STATE ?PROP)

(similar ?STATE

(not

(exists (?JUDGE)

(and

(instance ?JUDGE MoralJudging)

(result ?JUDGE ?MORALSTATEMENT)

(instance ?MORALSTATEMENT Fact))))))))I started with moral nihilism because it seems like the easiest case. If one can’t even say that this whole morality nonsense is pointless . . . 😛. Deontology is probably the second easiest due to the core operators already being defined in SUMO: obligation, permission, and prohibition. Basically, the idea is that if an action conforms to the ethical rules, then it’s good, and otherwise it’s bad:

(=>

(attribute ?DEONTOLOGIST Deontologist)

(believes ?DEONTOLOGIST

(or

(exists (?RULE)

(=>

(and

(instance ?PROC AgentProcess)

(conformsProcess ?PROC RULE))

(modalAttribute ?PROC MorallyGood)))

(exists (?RULE)

(=>

(and

(instance ?PROC AgentProcess)

(not (conformsProcess ?PROC RULE)))

(modalAttribute ?PROC MorallyBad))))))In the affirmative case with an obligation rule, my idea was that if a sub-process realizes the rule, then the process conforms to the obligation, so getting ready for bed can conform to the obligation to brush my teeth if it includes tooth brushing. And with a prohibition rule, a process conforms to the rule if no sub-process realizes the prohibition.

(=>

(and

(conformsProcess ?PROC ?RULE)

(containsInformation ?FORMULA ?RULE)

(instance ?FORMULA Formula)

(modalAttribute ?FORMULA Obligation))

(exists (?SUBPROC)

(and

(subProcess ?SUBPROC ?PROC)

(realization ?SUBPROC ?RULE))))

(=>

(and

(conformsProcess ?PROC ?RULE)

(containsInformation ?FORMULA ?RULE)

(instance ?FORMULA Formula)

(modalAttribute ?FORMULA Prohibition))

(not

(exists (?SUBPROC)

(and

(subProcess ?SUBPROC ?PROC)

(realization ?SUBPROC ?RULE)))))I later simplified things and decided that realization is probably sufficiently vague that I don’t need to get into sub-processes. I’m not 100% sure this is the right choice. Getting it right is low-priority until we’re actually reasoning with the ethics framework 😉. I also decided it’s easier to just work with formulas in SUMO, also adding a version for a class of behaviors realizing a formula (e.g., every instance of brushing one’s teeth will realize the proposition describing tooth brushing).

(<=>

(realizesFormula ?PROCESS ?FORMULA)

(exists (?PROP)

(and

(containsInformation ?FORMULA ?PROP)

(realization ?PROCESS ?PROP))))

(<=>

(realizesFormulaSubclass ?CPROCESS ?FORMULA)

(exists (?PROP)

(and

(containsInformation ?FORMULA ?PROP)

(forall (?IPROCESS)

(=>

(instance ?IPROCESS ?CPROCESS)

(realization ?IPROCESS ?PROP))))))The third draft of deontology is basically the same as the first, just more sophisticated. Deontology generally states that there is a rule (of a deontic variety). In this version, I decided to use confersNorm, which specifies which agent assigned a deontic norm. Then if the rule is an obligation, there is a moral judgment by the same agent that conferred the norm stipulating that it’s good for there to exist a behavior realizing the obligation and it is bad for there to not exist such a behavior. In the case of a prohibitory rule, it is judged to be bad for there to exist a behavior that realizes the rule. And in the case of a permissive rule, it is morally bad for there to exist a behavior that prevents the permitted rule.

(and

(refers Deontology ?STATE)

(instance ?STATE Statement)

(equal ?STATE

(exists (?RULE)

(and

(instance ?DEONTIC DeonticAttribute)

(modalAttribute ?RULE ?DEONTIC)

(exists (?AGENT)

(confersNorm ?AGENT ?RULE ?DEONTIC))

(=>

(modalAttribute ?RULE Obligation)

(exists (?JUDGE)

(and

(agent ?JUDGE ?AGENT)

(instance ?JUDGE MoralJudging)

(result ?JUDGE

(and

(modalAttribute

(exists (?BEHAVE)

(and

(realizesFormula ?BEHAVE ?RULE)

(instance ?BEHAVE AutonomousAgentProcess))) MorallyGood)

(modalAttribute

(not

(exists (?BEHAVE)

(and

(realizesFormula ?BEHAVE ?RULE)

(instance ?BEHAVE AutonomousAgentProcess))) MorallyBad)))))))

(=>

(modalAttribute ?RULE Prohibition)

(exists (?JUDGE)

(and

(agent ?JUDGE ?AGENT)

(instance ?JUDGE MoralJudging)

(result ?JUDGE

(modalAttribute

(exists (?BEHAVE)

(and

(realizesFormula ?BEHAVE ?RULE)

(instance ?BEHAVE AutonomousAgentProcess))) MorallyBad)))))

(=>

(modalAttribute ?RULE Permission)

(exists (?JUDGE)

(and

(agent ?JUDGE ?AGENT)

(instance ?JUDGE MoralJudging)

(result ?JUDGE

(forall (CBEHAVE)

(=>

(and

(subclass ?CBEHAVE AutonomousAgentProcess)

(realizesFormulaSubclass ?CBEHAVE ?RULE))

(modalAttribute

(exists (?BEHAVE)

(and

(instance ?BEHAVE AutonomousAgentProcess)

(prevents ?CBEHAVE ?BEHAVE))) MorallyBad)))))))))))Note that I have abstained from saying the moral status of behaviors that are permitted. Is it good to do something that doesn’t violate a prohibition? Sure, in a sense. Maybe it’s neutral? At core, I’m not sure these need to be specified. I probably like the version without confersNorm better because, for example, the legal system may confer some norms while many citizens will morally judge behavior based on compliance with the law.

Now that the blog post has got me thinking about where the moral judgment should enter the picture, I can see how one might argue that deontology refers to theories of moral statements as to which behaviors/choices are obliged, permitted, prohibited, etc. The moral judgments determine which of these statements one subscribes to (or believes to be factually true). Thus the judger-centric notion probably shouldn’t play this unifying role I’d hoped it could 😱. Yet, in support of the current approach, maybe deontology is the paradigm of passing moral judgments according to adherence to ethical codes. Perhaps a more precise way to frame things is, “One is morally judging in the style of paradigm X when . . .” And we could distinguish deontology from deontological theories.

After draft 1, I’d thought that a notion of “(ethical) choice point” should probably play this unifying role, which would focus on the act of deciding. One could say that what is being judged is the choice an agent made rather than the actual act itself. Returning to the reinforcement learning view, it’d be nice to capture how the different paradigms actually morally judge different junctures in the processes of autonomous agents: the character, the action, and the situation. And each can express the others.

I find it fun that formalization almost requires one to make clear choices on these matters, which seems to aid in the considerations. There can be a strong desire to just leave some details vague and unspecified. So far, the more variants I specify and the more precision with which they’re written, the easier it is to modify them based on additional philosophical considerations. Sometimes actually formulating a version in SUMO helps in judging its sensibility.

I’d also like to note that trying to do automated reasoning over some (toy) examples in connection with the high-level definitions can probably help one to figure out the effective way to structure the core concepts. Consider the case of “truthful large language models (LLMs)” in nursing robots.

- A consequentialist approach may focus on measuring the truth of the statements made and incorporating this error signal into the training. Funny when the utility function is attuned to truthfulness rather than pleasure, huh 😉.

- A deontological approach can focus on rules such as “do not lie”, which become tricky to apply if the notion of belief in a transformer-based LLM is murky. If one autoformalizes the output into logical statements, one could check for consistency among the LLM’s output, which should be correlated with “its beliefs” in a sense. The next question would be how to engineer consistent LLMs 🤔.

- A virtue-based approach would focus on the properties of the LLM-based agent itself. One example could be how direct quotations (that aren’t taken out of context) can be truth-preserving: if the original material is factual, then so is the quoted output. Can the LLM architecture reliably reference trusted sources, and can we formally verify this?

Note how each approach to truthful LLMs complementarily supports the others. Formally specifying the desiderata for this case example in SUMO and aiming to link it up to the high-level definitions will help to clarify which forms of the definitions to use. Actually syncing up the formal specs with a project engineering truthful LLMs would probably up-propagate even more clarity toward the abstractions 🤓.

Back to the SUMO work with a few examples of rules. It seems easy enough to express a prohibition on killing, for starters. I made up a predicate, TellingALie, using which it’s easy to express, “do not lie”. It seems fairly easy to say that there is a prohibition on performing a surgery without permission (– tho getting the quantifiers right can be a bit subtle, tehe 😋). I threw in a sketch of the golden rule: only do that which you desire to be done to you. I think now I can re-do these with more precision as to how the variables are quantified 🤓.

;; "Do not kill."

(modalAttribute

(and

(instance ?K Killing)

(agent ?K ?A1)

(patient ?K ?A2) Prohibition))

;; "Do not lie."

(modalAttribute

(and

(instance ?LIE TellingALie)

(agent ?LIE ?AGENT)) Prohibition)

;; "Do not perform surgery without permission from the patient."

(modalAttribute

(=>

(not

(confersNorm ?PAT (agent ?S ?DOC) Permission))

(and

(instance ?S Surgery)

(agent ?S ?DOC)

(patient ?S ?PAT)) Prohibition))

;; Golden rule: only do that which you desire done to you.

(<=>

(and

(instance ?P Process)

(agent ?P ?A1)

(patient ?P A2))

(and

(desires ?A1

(and

(agent ?P ?A3)

(patient ?P ?A1)))))Next up is virtue ethics. Here I tried using containsInformation to say that the proposition that is virtue ethics is expressed by this rule. It’s similar to the sub-proposition formulation (yet perhaps implies that this rule is the sum-total of the information).

Initially, I just went with the idea that if an agent is virtuous/vicious, then its behavior is likely good/bad.

(=>

(instance ?VIRTUEETHICS VirtueEthics)

(containsInformation

(=>

(and

(instance ?AGENT CognitiveAgent)

(instance ?PROC AgentProcess)

(instance ?VIRTUE VirtueAttribute)

(attribute ?AGENT ?VIRTUE)

(agent ?PROC ?AGENT))

(modalAttribute

(modalAttribute ?PROC MorallyGood) Likely)) ?VIRTUEETHICS))

(=>

(instance ?VIRTUEETHICS VirtueEthics)

(containsInformation

(=>

(and

(instance ?AGENT CognitiveAgent)

(instance ?PROC AgentProcess)

(instance ?VICE ViceAttribute)

(attribute ?AGENT ?VICE)

(agent ?PROC ?AGENT))

(modalAttribute

(modalAttribute ?PROC MorallyBad) Likely)) ?VIRTUEETHICS)) This is, to an extent, already framing the virtues in the lens of deontology. One could say that a pure virtue-based approach might focus only on what is and isn’t virtuous: the focus isn’t on whether one’s behavior is good or bad. One of the simplest virtues to formulate was pietas, dutifulness. If one is a member of a group that confers some norm and desires to adhere to the norm, then one is likely dutiful.

(instance Dutifulness VirtueAttribute)

(=>

(and

(=>

(and

(instance ?G GroupOfPeople)

(member ?A ?G)

(confersNorm ?G ?F Obligation))

(desires ?A ?F))

(=>

(and

(instance ?G GroupOfPeople)

(member ?A ?G)

(confersNorm ?G ?F Prohibition))

(desires ?A (not ?F))))

(modalAttribute (attribute Dutifulness ?A) Likely))Self-control is another fun one: an agent with the virtue of self-control is likely to do that which it desires to do. I’m somewhat pedantic in using likely because when we don’t know what’s internally going on inside an agent, adherence to this logical rule is only an indicator of the virtue. When working with AI agents, it may be possible to formally prove that the agent is the possessor of a virtue! Sophistical neuroimaging may also contribute to this end: can you imagine undergoing thorough tests to certify that you are a “disciplined, honest” person before being accepted for some role?

(instance SelfControl VirtueAttribute)

(=>

(attribute ?AGENT SelfControl)

(=>

(desires ?AGENT

(and

(instance ?ACTION Processs)

(agent ?ACTION ?AGENT)))

(modalAttribute

(and

(instance ?ACTION Processs)

(agent ?ACTION ?AGENT)) Likely)))Trying to define honest communication was fun: if, while communicating, an agent believes the message is true, then the communication is honest. And we can call an agent truthful when it desires its communications to be honest.

(subclass HonestCommunication Communication)

(<=>

(instance ?COMMM HonestCommunication)

(=>

(and

(instance ?COMM Communication)

(instance ?AGENT CognitiveAgent)

(agent ?COMM ?AGENT)

(refers ?CARRIER ?MESSAGE)

(patient ?COMM ?CARRIER))

(holdsDuring

(WhenFn ?COMM)

(believes ?AGENT

(truth ?MESSAGE True)))))

(subclass Honesty VirtueAttribute)

(instance Truthfulness Honesty)

(Instance Integrity Honesty)

(=>

(attribute ?AGENT Truthfulness)

(desires ?AGENT

(=>

(and

(instance ?COMM Communication)

(agent ?COMM ?AGENT))

(instance ?COMM HonestCommunication))))In the virtue category, I found it fun to try and define universal love: one is a bodhisattva with universal when, for all agents, if the agent wants or needs something physical, one will desire the agent to get the object of its desire. Moreover, if an agent desires something expressed by a formula, then the desire desires there to exist a representation of this desire, which could be a process realizing the desire ;- ). And the first version is a bit idealized, so I decided to coin epistemic universal love to denote the pragmatic specialization to a bodhisattva that merely desires the fulfillment of all needs, wants, and desires of which it is aware.

(subclass UniversalLove Love)

(<=>

(attribute ?BODHISATTVA UniversalLove)

(forall (?AGENT)

(and

(=>

(or

(needs ?AGENT ?OBJECT)

(wants ?AGENT ?OBJECT))

(desires ?BODHISATTVA

(and

(instance ?GET Getting)

(destination ?GET ?AGENT)

(patient ?GET ?OBJECT))))

(=>

(desires ?AGENT ?FORM)

(desires ?BODHISATTVA

(exists ?P)

(and

(containsInformation ?FORM ?PROP )

(represents ?P ?PROP)))))))

(subclass EpistemicUniversalLove Love)

(<=>

(attribute ?BODHISATTVA EpistemicUniversalLove)

(forall (?AGENT)

(and

(=>

(knows ?BODHISATTVA

(or

(needs ?AGENT ?OBJECT)

(wants ?AGENT ?OBJECT)))

(desires ?BODHISATTVA

(and

(instance ?GET Getting)

(destination ?GET ?AGENT)

(patient ?GET ?OBJECT))))

(=>

(knows ?BODHISATTVA

(desires ?AGENT ?FORM))

(desires ?BODHISATTVA

(exists ?P)

(and

(containsInformation ?FORM ?PROP )

(represents ?P ?PROP)))))))For the third draft of virtue ethics, I decided to more closely mirror the given definition of right action: “An action is right if and only if it is what a virtuous agent would do in the circumstances”. A challenge here is to define what it means for the virtuous agent to do in the circumstances: in SUMO, we’re not working with a world model where one can extract an agent from the circumstances and replace them with another, leaving all else the same. Counterfactuals are philosophically quite tricky, even if we casually use them on a regular basis. In line with the “necessary but not necessarily sufficient” approach to SUMO’s development, each rule minimally limits the scope of circumstances described. Given the rules for deciding essentially implied that the patient is always an intentional process (and I upgraded the rules to state this more clearly), maybe the set of options being decided upon can loosely represent “the circumstances“.

(<=>

(element ?P (DecisionOptionFn ?DECIDE))

(patient ?DECIDE ?P))Initially, deciding in SUMO was over instantiated processes, and I fixed it to be over classes of processes. In the case of classes, it might work to simply stipulate that the virtuous agent’s decision set is the same. I still think similarity is valuable to add because it’s not clear that every single option available to an agent will be important for moral considerations, nor is it easy to objectively delineate which will be. Given confusion with the instance version, I was motivated to take a stab at defining similarity. I value discussing “dead ends” in research.

My first approach was, in my opinion, very un-ontological, and I’ll spare the full details (which are on GitHub): I defined a notion of similar processes and then decided that similar sets are those that share more than half their elements (with respect to a binary predicate). Then I could say that two decisions are taking place in similar circumstances.

(<=>

(similarProcesses ?P1 ?P2)

(and

(equal ?SP1 (ProcessAttributeFn ?P1))

(equal ?SP2 (ProcessAttributeFn ?P2))

(similarSets ?SP1 ?SP2)))

(<=>

(similarSetsWithBP ?BP ?S1 ?S2)

(and

(greaterThan (MultiplicationFn 2 (CardinalityFn (ElementsShareBPFn ?BP ?S1 ?S2))) (CardinalityFn ?S1))

(greaterThan (MultiplicationFn 2 (CardinalityFn (ElementsShareBPFn ?BP ?S2 ?S1))) (CardinalityFn ?S2))))

(similarSetsWithBP similarProcesses (DecisionOptionFn ?DECIDE) (DecisionOptionFn ?DECIDEV)A more ontological approach occurred to me: why does it matter that two situations are similar? Because if the situations are similar, then I will make similar judgments about them. This is fairly easy to specify and can work with many specific implementations of similarity measures. I’m not quite sure how the semantics of a recursive definition work in SUMO, to be honest. It should help to note that if two entities are equal, then they are similar for all agents (which lines up with the Leibniz approach to defining equality).

(=>

(similar ?A ?E1 ?E2)

(=>

(and

(instance ?J1 Judging)

(agent ?J1 ?A)

(patient ?J1 ?E1)

(result ?J1 ?O1)

(instance ?J2 Judging)

(agent ?J2 ?A)

(patient ?J2 ?E2)

(result ?J2 ?O2))

(modalAttribute (similar ?A ?O1 ?O2) Likely)))

(=>

(equal ?E1 ?E2)

(forall (?A)

(similar ?A ?E1 ?E2)))

(<=>

(similar ?A ?E1 ?E2)

(similar ?A ?E2 ?E1))Now we can look at the third draft of virtue ethics, which refers to the statement that a behavior is judged as morally good if and only if the judging agent believes that if this behavior results from a decision and there were to be a virtuous agent making a similar decision, then the virtuous agent is likely to decide on the same class of behaviors.

(and

(refers VirtueEthics ?STATE)

(instance ?STATE Statement)

(equals ?STATE

(<=>

(and

(instance ?JUDGE MoralJudging)

(agent ?JUDGE ?AGENTJ)

(patient ?JUDGE

(modalAttribute

(hasInstance ?CBEHAVE) MorallyGood)))

(believes ?AGENTJ

(=>

(and

(instance ?DECIDE DecidingSubclass)

(result ?DECIDE ?CBEHAVE)

(subclass ?CBEHAVE AutonomousAgentProcess)

(agent ?DECIDEV ?AGENTV)

(instance ?AGENTV VirtuousAgent)

(instance ?DECIDEV DecidingSubclass)

(similar ?AGENTJ (DecisionSubclassOptionFn ?DECIDE) (DecisioSubclassnOptionFn ?DECIDEV)))

(modalAttribute (result ?DECIDEV ?CBEHAVE) Likely))))))

The utilitarian family of paradigms was actually the hardest to sketch out. The technology for measuring physical outcomes, which again depends on some causal world model, is not well-developed in SUMO. And there’s usually an idea of aggregating the utility functions of members of a group into the utility function for the group, which is a type of detail that’s often ontologically glossed over.

Initially, I tried to use stuff like “AppraisalOfPleasantness“. What’s the point of doing this in SUMO if I’m not going to use the concepts already defined in it? Maybe we can define a utility sum function that aggregates the utility measures of the appraisals of pleasantness of each member of the group for each process that affects any member of the group?

(instance UtilityMeasure UnaryFunction)

(domain UtilityMeasure 1 AppraisalOfPleasantness)

(range UtilityMeasure RealNumber)

(instance UtilitySumFn BinaryFunction)

(domain UtilitySumFn 1 UtilityGroup)

(domain UtilitySumFn 2 Process)

(range UtilitySumFn RealNumber)Thus the first stab at utilitarianism says that when two processes, ?P1 and ?P2, are available to an agent in a group yet not both possible and the aggregate utility to the group of ?P1 is greater than that of ?P2, then ?P2 is good and ?P2 is bad. Working with decision sets, which came later, simplifies things.

(=>

(instance ?UTILITARIANISM Utilitarianism)

(containsInformation

(=>

(and

(greaterThan

(UtilitySumFn ?UG ?P1)

(UtilitySumFn ?UG ?P2))

(member ?A ?UG)

(modalAttribute

(agent ?P1 ?A) Possibility)

(modalAttribute

(agent ?P2 ?A) Possibility)

(not

(modalAttribute

(and

(agent ?P1 ?A)

(agent ?P2 ?A) Possibility))))

(and

(modalAttribute ?P1 MorallyGood)

(modalAttribute ?P2 MorallyBad))) ?UTILITARIANISM))I also defined a strict version of preference utilitarianism, which states that when all the members of a preference group unilaterally prefer agent ?A doing ?P1 to ?P2, then it is good to do ?P1 and bad to do ?P2. Most of the difficulty for preference utilitarianism lies in how to fulfill as many preferences as possible while dealing with conflicts, which is completely dodged 😎. Conflict resolution is needed in practice, and yet the core ethos can be expressed without ‘solving’ the problem: anyone who wishes to use the definition in practice will, however, need to do something 🤡🤖.

(=>

(instance ?PREFERENCEUTILITARIANISM Utilitarianism)

(containsInformation

(=>

(and

(instance ?PG PreferenceGroup)

(member ?A ?PG)

(instance ?P1 Process)

(instance ?P2 Process)

(modalAttribute

(agent ?P1 ?A) Possibility)

(modalAttribute

(agent ?P2 ?A) Possibility)

(not

(modalAttribute

(and

(agent ?P1 ?A)

(agent ?P2 ?A) Possibility)))

(forall (?MEMBER)

(prefers ?MEMBER

(agent ?P1 ?A)

(agent ?P2 ?A))))

(and

(modalAttribute ?P1 MorallyGood)

(modalAttribute ?P2 MorallyBad))) ?PREFERENCEUTILITARIANISM))For draft 2 with utilitarianism, I need to mention that I started to experiment with having GPT-4 (via the ChatGPT interface) do SUMO formalizations with me. I politely introduced the project and showed it the core definitions of ethics, moral judging, deontology, and virtue ethics. Then I asked our kind emissary of digital intelligence,

Now I’m going to start sketching out definitions of Utilitarianism.

I will start with Hedonistic versions (in line with Bentham): “Hedonism = the value of the consequences depends only on the pleasures and pains in the consequences”.

An action will be morally good if it causes pleasure (or more pleasure than any other possible action) and morally bad if it causes pain (or more pain that some other possible action). Can you provide a definition of Utilitarianism in SUMO?

(subclass Utilitarianism Ethics)

(documentation Pleasure EnglishLanguage "Pleasure refers to a positive, enjoyable, or satisfying feeling or experience.")

(instance Pleasure PsychologicalAttribute)

(documentation Pain EnglishLanguage "Pain refers to an unpleasant sensory or emotional experience, such as physical discomfort, distress, or suffering.")

(instance Pain PsychologicalAttribute)

(documentation Consequence EnglishLanguage "A Consequence is an event or condition that follows, results from, or is caused by another event or condition, typically an action.")

(subclass Consequence Event)Comrade GPT-4 started fairly reasonably; however, pleasure is defined as an instance of an emotional state, which is actually a psychological attribute, so this isn’t wrong. (Maybe Comrade Bing would be better at this role, although I’ve seen it misquote online sources anyway 🤷♂️.) There’s no event in SUMO, alas. The definition offered of a utility function is good enough that I kept it as is. The magical “black box” function that maps an autonomous process to a real number 😏😎. Maybe we should just use this at the ontological level, allowing users to provide implementations as needed.

(documentation UtilityFn EnglishLanguage "A UnaryFunction that maps an instance of AutonomousAgentProcess

to the net utility it creates. In the case of hedonistic utilitarianism, this may be (pleasure - pain).")

(domain UtilityFn 1 AutonomousAgentProcess)

(instance UtilityFn TotalValuedRelation)

(instance UtilityFn UnaryFunction)

(range UtilityFn RealNumber)The definition of hedonic utilitarianism is also pretty good. I need to let it know that “>=” is “greaterThan” in SUMO. Second, it’s simply quantifying over all actions and not the possible actions in the circumstances. Maybe I am expecting a lot from Comrade GPT-4.

;; A morally good action according to Hedonistic Utilitarianism

(and

(refers HedonisticUtilitarianism ?STATE)

(instance ?STATE Statement)

(equals ?STATE

(<=>

(and

(instance ?JUDGE MoralJudging)

(patient ?JUDGE

(modalAttribute ?BEHAVE MorallyGood)))

(and

(instance ?BEHAVE AutonomousAgentProcess)

(forall (?OTHERBEHAVE)

(=> (and

(instance ?OTHERBEHAVE AutonomousAgentProcess)

(not (equal ?BEHAVE ?OTHERBEHAVE)))

(>= (UtilityFn ?BEHAVE) (UtilityFn ?OTHERBEHAVE))))))))

So I asked it to try again:

I see two options.

1) Simply measure behavior as good or bad if they have positive or negative utility.

2) Define the moral judgment in the context of a decision and use DecisionOptionFn to compare the utility of all the possible options in this instance of Deciding.

Can you provide both these versions of HedonisticUtilitarianism?

;; A morally good action according to Hedonistic Utilitarianism (version 1)

(and

(refers HedonisticUtilitarianism ?STATE)

(instance ?STATE Statement)

(equals ?STATE

(<=>

(and

(instance ?JUDGE MoralJudging)

(patient ?JUDGE

(modalAttribute ?BEHAVE MorallyGood)))

(and

(instance ?BEHAVE AutonomousAgentProcess)

(greaterThan (UtilityFn ?BEHAVE) 0)))))

;; A morally good action according to Hedonistic Utilitarianism (version 2)

(and

(refers HedonisticUtilitarianism ?STATE)

(instance ?STATE Statement)

(equals ?STATE

(<=>

(and

(instance ?JUDGE MoralJudging)

(patient ?JUDGE

(modalAttribute ?BEHAVE MorallyGood)))

(and

(instance ?DECIDE Deciding)

(result ?DECIDE ?BEHAVE)

(forall (?OPTION)

(=> (member ?OPTION (DecisionOptionFn ?DECIDE))

(greaterThanOrEqualTo (UtilityFn ?BEHAVE) (UtilityFn ?OPTION))))))))

I think that version 1, while simple, is quite good. All the complexity is swept under the rug of the sacred utility function, and that’s perfectly ok. An action is good if and only if its utility is greater than zero. Done.

I think version 2 is also pretty good: if a behavior is better than all other options, then it’s good to do. I dropped the “if and only if” because we may wish to judge behaviors not resulting from a decision.

For draft 3 of version 1, I kept everything essentially the same aside from lifting the judgments to correctly be over formulas and classes. For what it’s worth, “hasInstance” is a helper function I defined because writing out “exists (bla) (instance bla class)” over and over again felt really tedious. I’ll probably need to remove these to not clutter up the official SUMO repository with local definitions — maybe an LLM can undo all the shorthand for me 😉.

(documentation UtilityFn EnglishLanguage "A UnaryFunction that maps an instance of AutonomousAgentProcess

to the net utility it creates. In the case of hedonistic utilitarianism, this may be (pleasure - pain).")

(domainSubclass UtilityFn 1 AutonomousAgentProcess)

(instance UtilityFn TotalValuedRelation)

(instance UtilityFn UnaryFunction)

(range UtilityFn RealNumber)

(and

(refers Utilitarianism ?STATE)

(instance ?STATE Statement)

(equals ?STATE

(and

(<=>

(and

(instance ?JUDGE MoralJudging)

(result ?JUDGE

(modalAttribute (hasInstance ?CBEHAVE) MorallyGood)))

(greaterThan (UtilityFn ?CBEHAVE) 0))

(<=>

(and

(instance ?JUDGE MoralJudging)

(result ?JUDGE

(modalAttribute (hasInstance ?CBEHAVE) MorallyBad)))

(lessThan (UtilityFn ?CBEHAVE) 0)))))

(<=>

(hasInstance ?CLASS)

(exists (?INSTANCE)

(instance ?INSTANCE ?CLASS)))Draft 3 of version 2 is also mostly the same as what comrade GPT-4 offered.

(and

(refers Utilitarianism ?STATE)

(instance ?STATE Statement)

(equals ?STATE

(=>

(and

(instance ?DECIDE Deciding)

(agent ?DECIDE ?AGENT)

(result ?DECIDE ?CBEHAVE)

(subclass ?CBEHAVE AutonomousAgentProcess)

(forall (?OPTION)

(=>

(member ?OPTION (DecisionOptionFn ?DECIDE))

(greaterThanOrEqualTo (UtilityFn ?CBEHAVE) (UtilityFn ?OPTION)))))

(and

(instance ?JUDGE MoralJudging)

(result ?JUDGE

(modalAttribute

(exists (?IBEHAVE)

(and

(instance ?IBEHAVE ?CBEHAVE)

(agent ?IBEHAVE ?AGENT))) MorallyGood))))))That’s all for draft 3, actually. In draft 2, I tried to find some way to specify what it means for a moral judgment to depend only on the consequences of the act. So let’s say that an outcome is a physical entity if and only if there exists a process of which it is the result, so it’s quite wrapped up in the case role. Generally, let’s say that an outcome begins after the process it results from (as there could be some overlap in duration).

(documentation Consequentialism EnglishLanguage "Consequentialism is a moral theory that holds that

'whether an act is morally right depends only on consequences (as opposed to the circumstances

or the intrinsic nature of the act or anything that happens before the act)' (Stanford Encyclopedia of Philosophy).")

(subclass Consequentialism Utilitarianism)

(subclass Outcome Physical)

;; O is an outcome if and only if there exists some process P such that O is the result of P.

(<=>

(instance ?OUTCOME Outcome)

(exists (?P)

(and

(instance ?P Process)

(result ?P ?OUTCOME))))

;; If O is an outcome of P, then P begins before O.

(=>

(and

(instance ?OUTCOME Outcome)

(result ?P ?OUTCOME))

(before

(BeginFn (WhenFn ?P))

(BeginFn (WhenFn ?OUTCOME))))Because consequentialism is a subclass of utilitarianism, I only need to specify that the conclusion of the moral judgment only depends on the consequences. So for every moral judgment concluding ?C, there exists an argument concluding ?C where all physical premises are outcomes of the behavior, and no premise refers to an attribute of the agent nor to a manner of the behavior.

(and

(refers Consequentialism ?STATE)

(instance ?STATE Statement)

(equals ?STATE

(=>

(and

(instance ?JUDGE MoralJudging)

(result ?JUDGE ?CONCLUSION)

(or (equal ?MoralAttribute MorallyGood) (equal ?MoralAttribute MorallyBad))

(equals ?CONCLUSION

(modalAttribute ?BEHAVE ?MoralAttribute)))

(exists (?ARGUMENT)

(and

(instance ?ARGUMENT Argument)

(conclusion ?ARGUMENT ?CONCLUSION)

(agent ?BEHAVE ?AGENT)

(forall (?PROP)

(and

(=>

(and

(premise ?ARGUMENT ?PROP)

(refers ?PROP ?PHYS)

(instance ?PHYS Physical))

(and

(instance ?PHYS Outcome)

(result ?BEHAVE ?PHYS)))

(=>

(premise ?ARGUMENT ?PROP)

(and

(forall (?ATT)

(=>

(attribute ?AGENT ?ATT))

(not (refers ?PROP ?ATT)))

(forall (?MAN)

(=>

(manner ?MAN ?BEHAVE)

(not (refers ?PROP ?MAN)))))))))))))I find this approach of getting at the dependence of the moral judgment on the consequences very roundabout, yet I had trouble finding something more direct. While I was impressed with comrade GPT-4’s extrapolation in the utilitarianism case, it seems to struggle with these indirect paths.

Comrade GPT-4 seemed very useful for helping with boilerplate code (which is what GitHub Copilot is doing). In SUMO, when defining humans, one needs to specify that they’re all unique. This becomes quite tedious, so being able to tell an AI code assistant what one wants is really nice. Moreover, when I decided to use a unique list for brevity, comrade GPT-4 had little trouble figuring it out.

;; The humans involved

(instance ?MORALAGENT Human)

(instance ?PERSON1 Human)

(instance ?PERSON2 Human)

(instance ?PERSON3 Human)

(instance ?PERSON4 Human)

(instance ?PERSON5 Human)

(instance ?PERSON6 Human)

(not (equal ?PERSON1 ?PERSON2))

(not (equal ?PERSON1 ?PERSON3))